Today’s topic is VMware Cloud Foundation (VCF) and homogeneous network mapping when a server has additional PCIe interfaces.

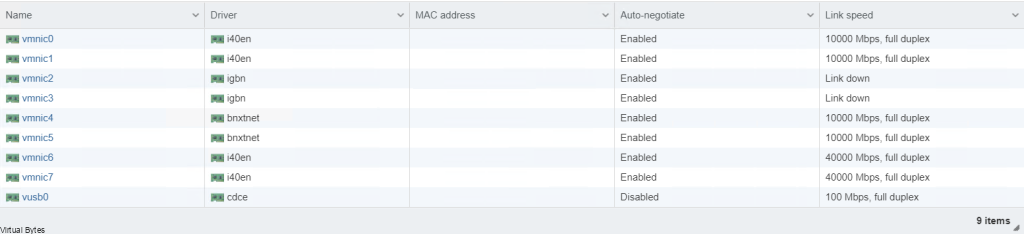

| Physical Port | Device Alias |

| Onboard port 1 | vmnic0 |

| Onboard port 2 | vmnic1 |

| Onboard port 3 | vmnic2 |

| Onboard port 4 | vmnic3 |

| Slot #2 port 1 | vmnic4 |

| Slot #2 port 2 | vmnic5 |

| Slot #4 port 1 | vmnic6 |

| Slot #4 port 2 | vmnic7 |

VMware KB – How VMware ESXi determines the order in which names are assigned to devices (2091560) this KB talks about vmnic ordering and assignment, but the post below will explain when a NVMe PCIe disk is apart of a host.

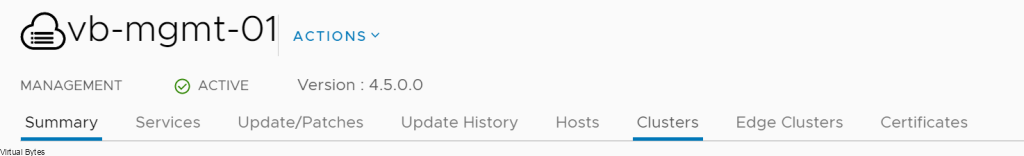

What kind of environment? – VMware Cloud Foundation 4.x

If a system has:

- Four onboard network ports

- One dual-port NIC in slot #2

- One dual-port NIC in slot#4

Then devices names should be assigned as:
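To make the ordering in the table above concrete, here is a minimal Python sketch that reproduces it under a simplified rule: onboard ports first, then add-in cards by ascending slot number, then ports by ascending index on each card. The `Port` class and `assign_vmnic_names` function are hypothetical helpers for illustration; ESXi's real logic, described in the KB, has more detail than this model.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Port:
    """One physical NIC port (hypothetical model for illustration)."""
    slot: Optional[int]  # None = onboard port; otherwise the PCIe slot number
    port: int            # 1-based port index on that device

    def label(self) -> str:
        if self.slot is None:
            return f"Onboard port {self.port}"
        return f"Slot #{self.slot} port {self.port}"


def assign_vmnic_names(ports: List[Port]) -> Dict[str, str]:
    """Map each physical port label to a vmnic alias.

    Simplified rule (an assumption, not ESXi's actual code): onboard ports
    first, then add-in cards by ascending slot, then ports by ascending index.
    """
    ordered = sorted(
        ports,
        # False (onboard) sorts before True (slot card), then slot, then port.
        key=lambda p: (p.slot is not None, p.slot or 0, p.port),
    )
    return {p.label(): f"vmnic{i}" for i, p in enumerate(ordered)}


# The example system: four onboard ports plus dual-port NICs in slots #2 and #4.
system = (
    [Port(None, n) for n in range(1, 5)]
    + [Port(2, n) for n in (1, 2)]
    + [Port(4, n) for n in (1, 2)]
)

for physical, alias in assign_vmnic_names(system).items():
    print(f"{physical:<16}{alias}")
```

In this model, adding a dual-port card in slot #1 pushes every slot #2 and slot #4 port up by two vmnic numbers, a small-scale version of the kind of shift this post is about.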

The problem:

A physical server has more PCIe interfaces than another server that you want to bring into the same existing or new cluster.

For example, one Dell PowerEdge R740 has 24 NVMe PCIe SSDs, two Mellanox 40 Gb QSFP PCIe NICs, one Intel X710 quad-port 10 Gb SFP+ NDC (LOM), and a BOSS card. Another server has the same network cards and BOSS card (two Mellanox 40 Gb QSFP PCIe NICs, one Intel X710 quad-port 10 Gb SFP+ NDC) but fewer than 24 NVMe drives.

The additional PCIe devices (here, the extra NVMe drives) cause the server's PCIe hardware IDs to shift by N, so certain vmnics show up out of order. The result is a non-homogeneous network layout on the VMware Cloud Foundation ESXi hosts that are part of a workload domain. Identical hardware is important for a successful VCF workload domain deployment.

This issue can cause problems for any future VMware Cloud Foundation 4.x deployment. If an existing cluster includes any of these configurations (a high-density compute node, a vGPU node, or a high-density storage node), it throws off the PCIe mapping and prevents all ESXi hosts from having a homogeneous mapping of vmnics to physical NICs.
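One way to spot this before a VCF deployment is to record, for every host, which physical port each vmnic maps to and compare each host against a reference host. The Python sketch below does that. The host names, port labels, and the `check_cluster` / `find_mismatches` helpers are hypothetical, and how you collect the per-host mapping is up to you.

```python
from typing import Dict, List

# vmnic name -> physical port label, recorded per host (hypothetical data model)
PortMap = Dict[str, str]


def vmnic_index(name: str) -> int:
    """Sort key so vmnic10 comes after vmnic9 (assumes names of the form vmnicN)."""
    return int(name[len("vmnic"):])


def find_mismatches(reference: PortMap, other: PortMap) -> List[str]:
    """vmnics whose physical port differs from the reference host,
    including vmnics that exist on only one of the two hosts."""
    names = sorted(set(reference) | set(other), key=vmnic_index)
    return [n for n in names if reference.get(n) != other.get(n)]


def check_cluster(hosts: Dict[str, PortMap]) -> Dict[str, List[str]]:
    """Compare every host with the first one listed (expects at least one host);
    return only the hosts whose layout differs."""
    (_, reference), *others = hosts.items()
    report = {}
    for host, mapping in others:
        mismatches = find_mismatches(reference, mapping)
        if mismatches:
            report[host] = mismatches
    return report


# Made-up example: esxi-02 has extra NVMe devices and two vmnics landed out of order.
hosts = {
    "esxi-01": {"vmnic0": "X710 port 1", "vmnic1": "X710 port 2",
                "vmnic4": "Mellanox slot A port 1", "vmnic5": "Mellanox slot A port 2"},
    "esxi-02": {"vmnic0": "X710 port 1", "vmnic1": "Mellanox slot A port 1",
                "vmnic4": "X710 port 2", "vmnic5": "Mellanox slot A port 2"},
}

print(check_cluster(hosts))  # {'esxi-02': ['vmnic1', 'vmnic4']}
```

An empty report means every host matches the reference layout, which is the homogeneous state VCF expects.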

The Fix:

Before you make any configuration changes to your ESXi host in VCF, remove the host from its cluster in the workload domain and decommission it.

To remove the host from its cluster in the workload domain:

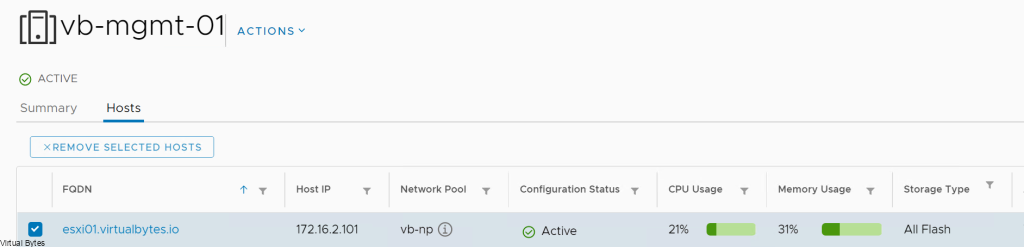

Go to Workload Domains -> (your domain) -> Clusters -> Hosts tab, and select the host you want to remove.

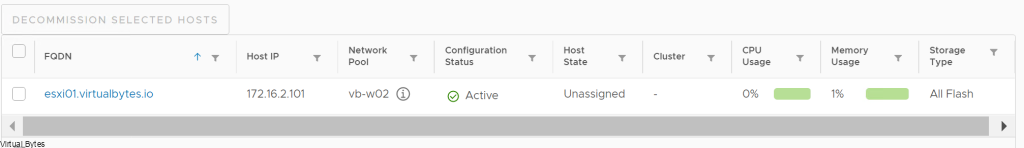

Then go back to the main SDDC Manager page -> Hosts, and decommission the selected ESXi host.

Once the host is decommissioned, wipe all of its NVMe disks first. Then shut down the ESXi host and pull the NVMe disks out slightly so they do not power on. That way, the next time you re-image the ESXi host it will see only one disk: your boot drive or a BOSS M.2 SSD.

After the server is back up, log in to the ESXi host again. All the vmnics should now be aligned and show up in a homogeneous layout.
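To check the layout from the command line, you can run `esxcli network nic list` on each host and compare the results. The Python sketch below assumes the output is a fixed-width table with a header row followed by a row of dashes marking each column; check that against your own hosts. The sample text (trimmed to four columns), PCI addresses, and drivers are made up for illustration, and `parse_fixed_width`, `nic_layout`, and `layout_mismatches` are hypothetical helpers.

```python
import re
from typing import Dict, List, Tuple

# Illustrative `esxcli network nic list`-style output, trimmed to four columns.
# Addresses, drivers and descriptions are made up for this example.
HEADER = (
    "Name    PCI Device    Driver      Description\n"
    "------  ------------  ----------  --------------------------------------------------\n"
)
HOST_A = HEADER + (
    "vmnic0  0000:19:00.0  i40en       Intel(R) Ethernet Controller X710 for 10GbE SFP+\n"
    "vmnic1  0000:19:00.1  i40en       Intel(R) Ethernet Controller X710 for 10GbE SFP+\n"
    "vmnic4  0000:3b:00.0  nmlx5_core  Mellanox Technologies MT27700 Family [ConnectX-4]\n"
)
HOST_B = HEADER + (
    "vmnic0  0000:19:00.0  i40en       Intel(R) Ethernet Controller X710 for 10GbE SFP+\n"
    "vmnic1  0000:5e:00.0  nmlx5_core  Mellanox Technologies MT27700 Family [ConnectX-4]\n"
    "vmnic4  0000:19:00.1  i40en       Intel(R) Ethernet Controller X710 for 10GbE SFP+\n"
)


def parse_fixed_width(text: str) -> List[Dict[str, str]]:
    """Parse a header row, a dashed separator row, then data rows."""
    lines = [line for line in text.splitlines() if line.strip()]
    header, dashes, rows = lines[0], lines[1], lines[2:]
    # Each run of '-' in the separator row marks the extent of one column.
    spans = [m.span() for m in re.finditer(r"-+", dashes)]
    # Let the last column run to end of line so long descriptions stay whole.
    spans[-1] = (spans[-1][0], None)
    names = [header[start:end].strip() for start, end in spans]
    return [
        {name: row[start:end].strip() for name, (start, end) in zip(names, spans)}
        for row in rows
    ]


def nic_layout(text: str) -> Dict[str, Tuple[str, str]]:
    """vmnic -> (driver, description), a rough stand-in for 'which card is this'."""
    return {r["Name"]: (r["Driver"], r["Description"]) for r in parse_fixed_width(text)}


def layout_mismatches(a_text: str, b_text: str) -> List[str]:
    """vmnics whose driver/description differ between two hosts."""
    a, b = nic_layout(a_text), nic_layout(b_text)
    return sorted(
        (n for n in set(a) | set(b) if a.get(n) != b.get(n)),
        key=lambda n: int(n[len("vmnic"):]),
    )


print(layout_mismatches(HOST_A, HOST_B))  # ['vmnic1', 'vmnic4']
```

Comparing driver and description catches a Mellanox port landing where an Intel port should be, but not two ports of the same card swapped with each other. PCI addresses are left out of the comparison on purpose, because per the problem described above they can differ between hosts with different numbers of NVMe devices.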

The Results:

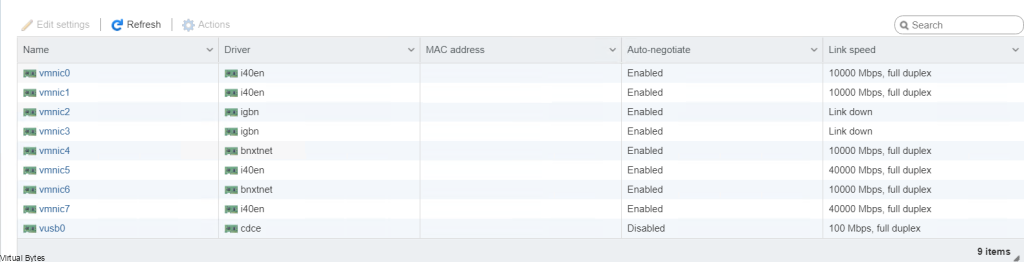

Before

After