A little about what VMware Cloud Director (VCD) is: it is a cloud management platform (CMP) that pools and abstracts the VMware virtualization infrastructure into Virtual Data Centers (VDCs). A provider can offer a customer many different flavors and specifications of a tenant, for example Gold, Silver or Bronze tiers of capacity. This gives a good allocation model: a customer who needs a higher guaranteed resource allocation can take a higher tier, whereas a customer who only wants to test a few software solutions can use the Bronze tier and save costs.
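To make the tiering idea concrete, here is a minimal sketch in Python. The guarantee percentages and the `pick_tier` helper are hypothetical values I made up for illustration; they are not VCD defaults, and a real provider would define its own tiers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    cpu_guarantee_pct: int     # share of allocated CPU that is guaranteed
    memory_guarantee_pct: int  # share of allocated memory that is guaranteed


# Hypothetical tier definitions, for illustration only.
TIERS = {
    "gold": Tier("Gold", 100, 100),
    "silver": Tier("Silver", 50, 50),
    "bronze": Tier("Bronze", 0, 0),
}


def pick_tier(needs_guaranteed_resources: bool, is_test_workload: bool) -> Tier:
    """Pick a tier: guarantees win first, test workloads go to the cheapest tier."""
    if needs_guaranteed_resources:
        return TIERS["gold"]
    if is_test_workload:
        return TIERS["bronze"]
    return TIERS["silver"]
```

A customer who only wants to try out some software would land on `pick_tier(False, True)`, which is the Bronze tier in this sketch.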

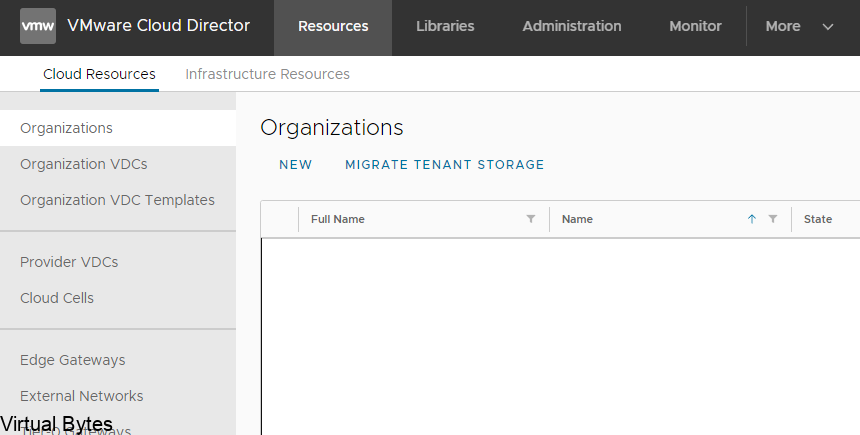

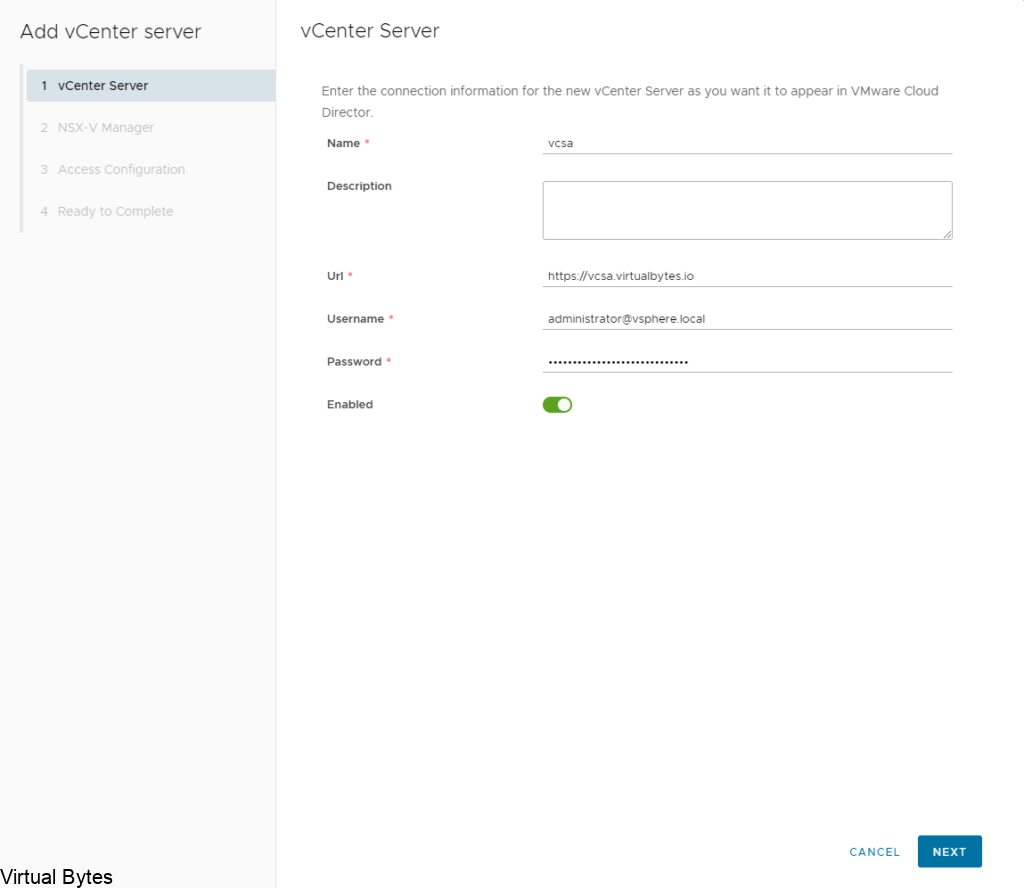

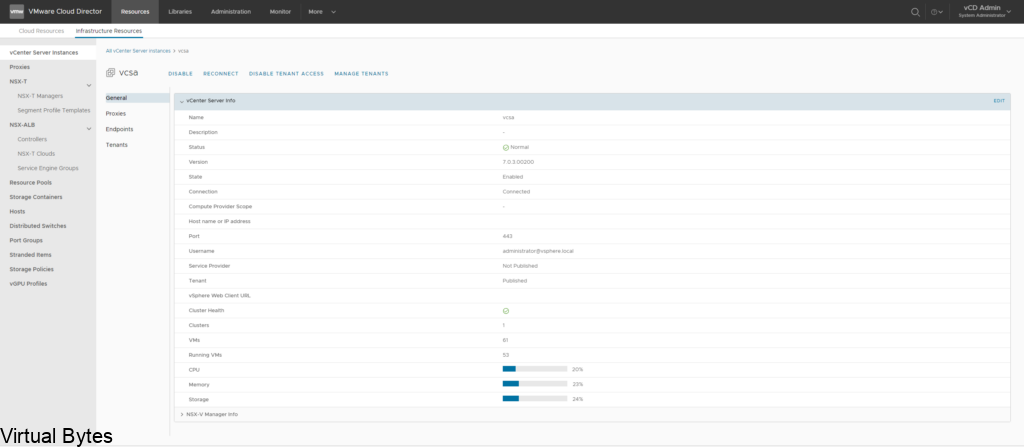

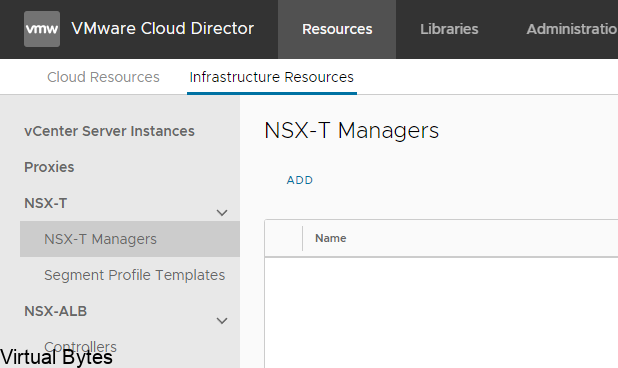

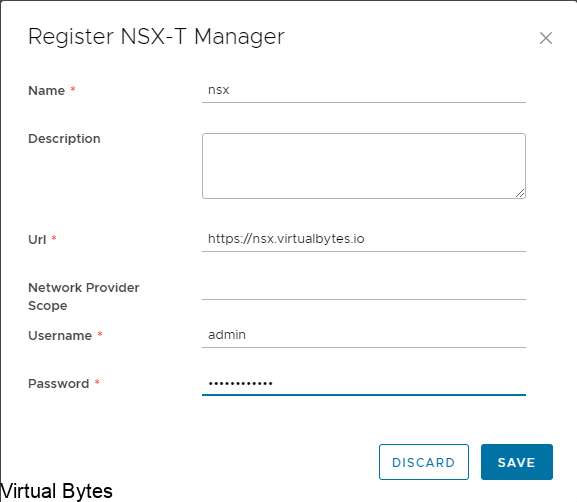

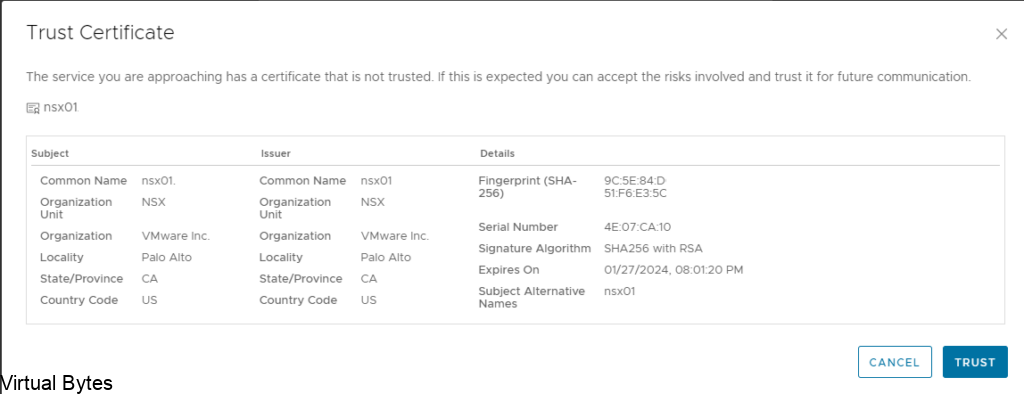

Once you are logged in, you will want to create a few things first! My previous blog post already explains how to add a vCenter Server and the NSX-T integration.

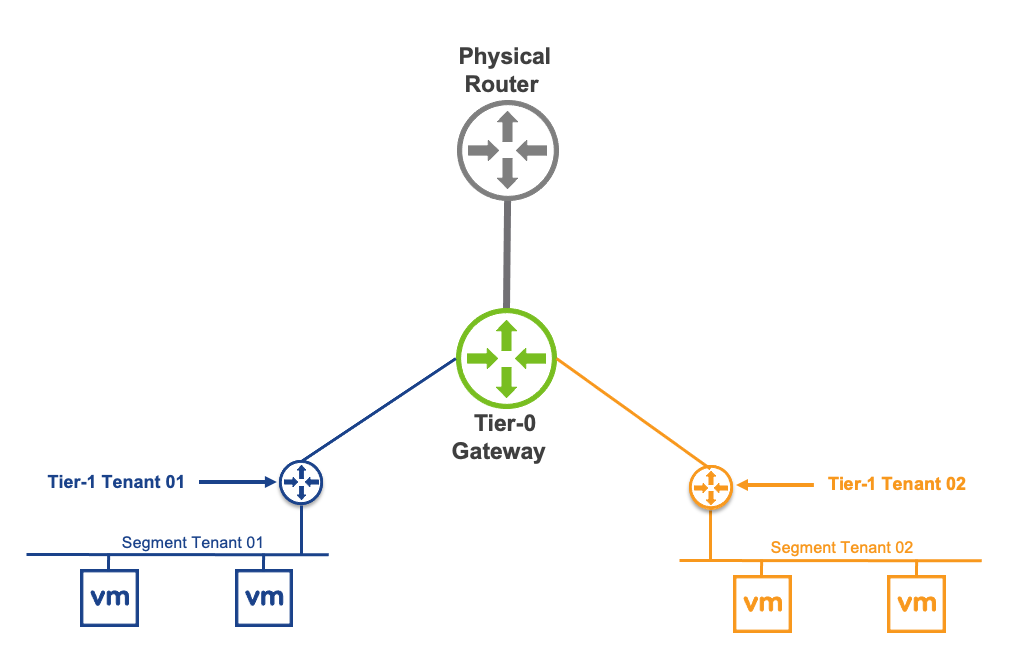

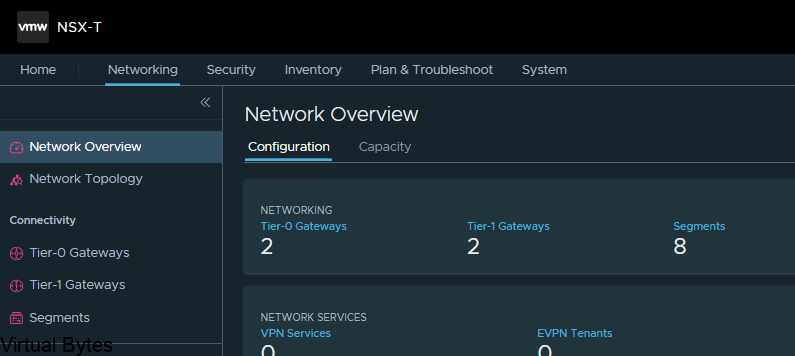

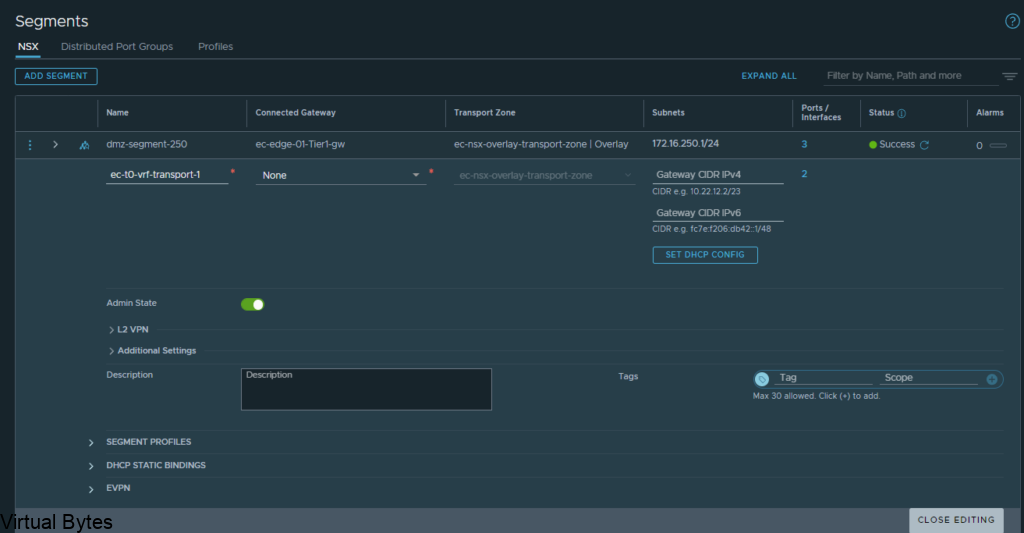

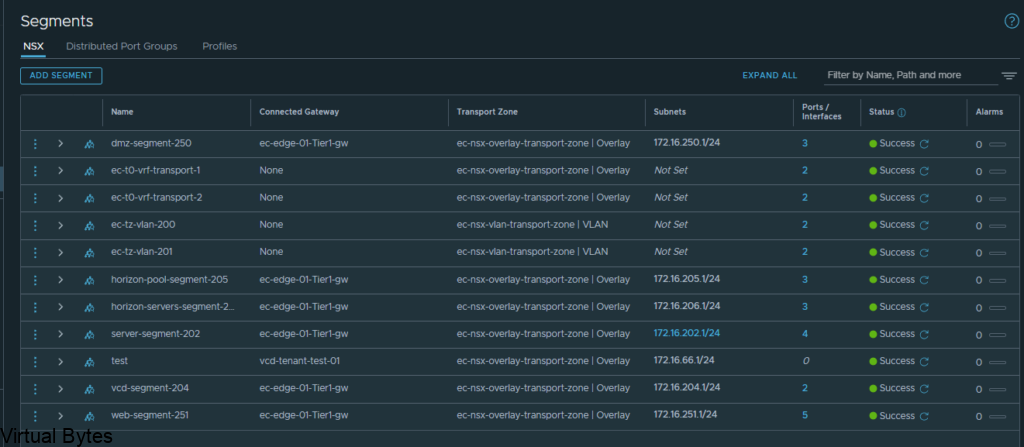

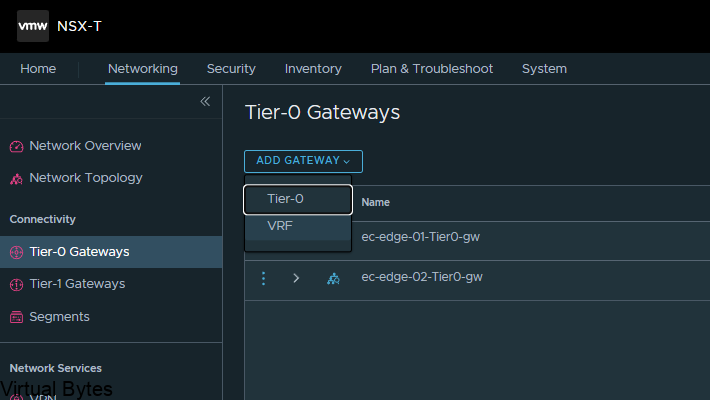

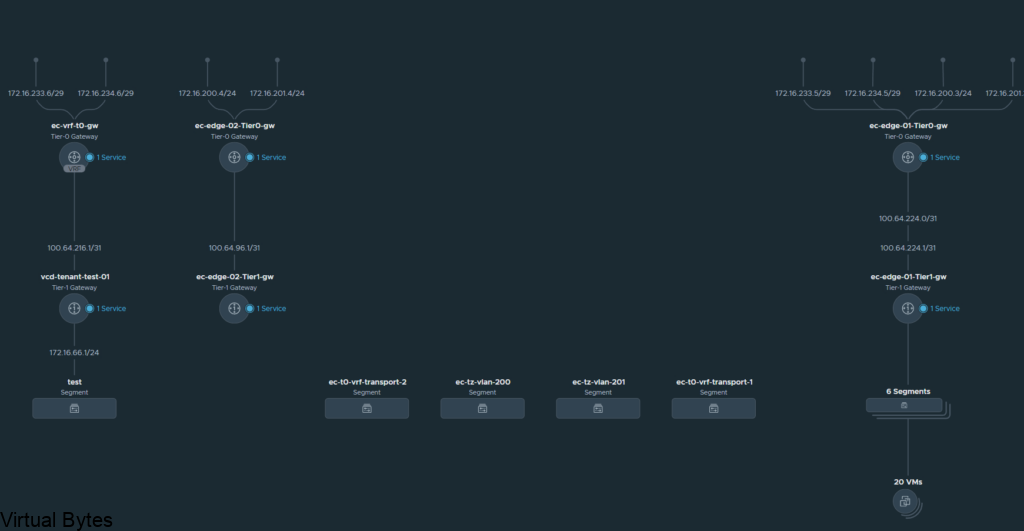

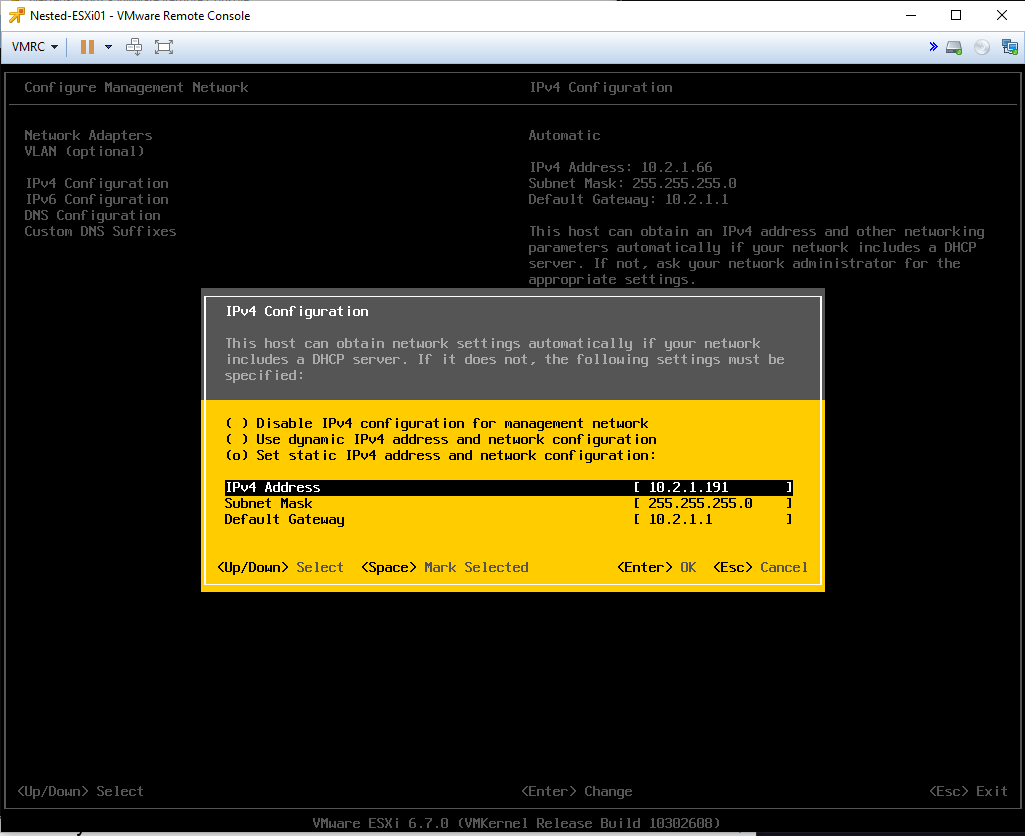

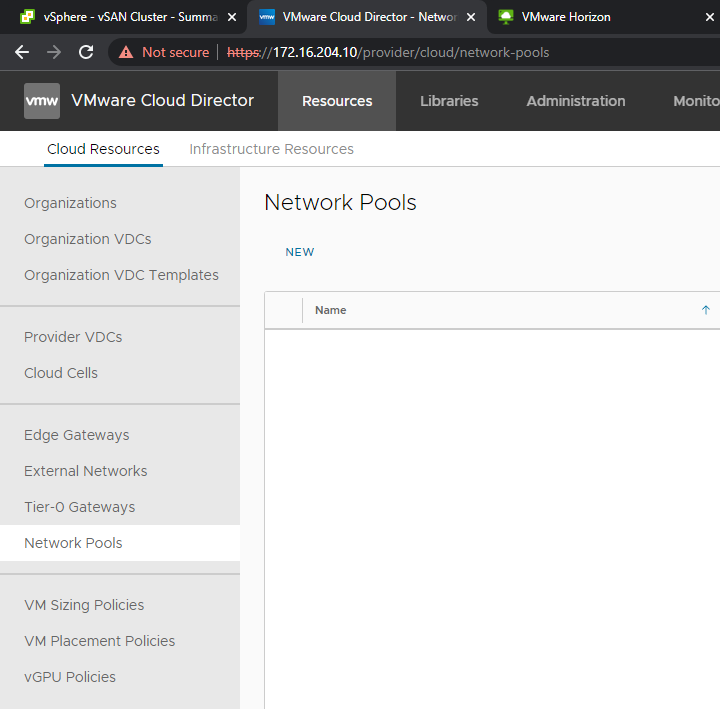

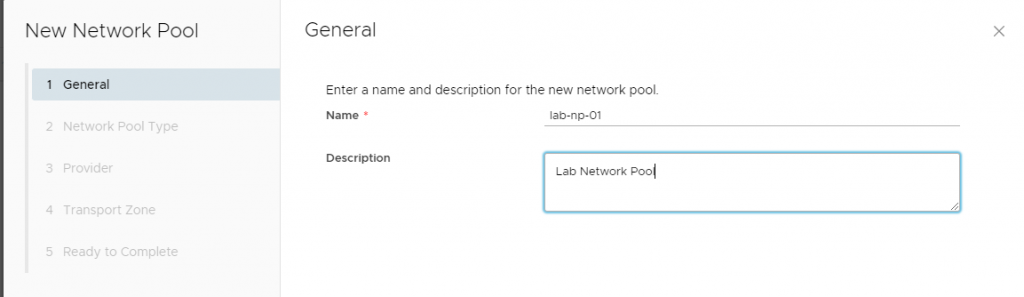

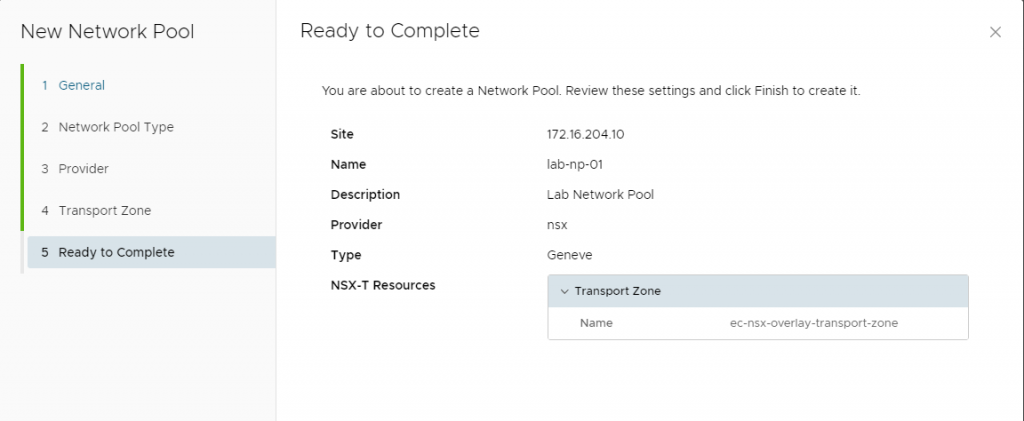

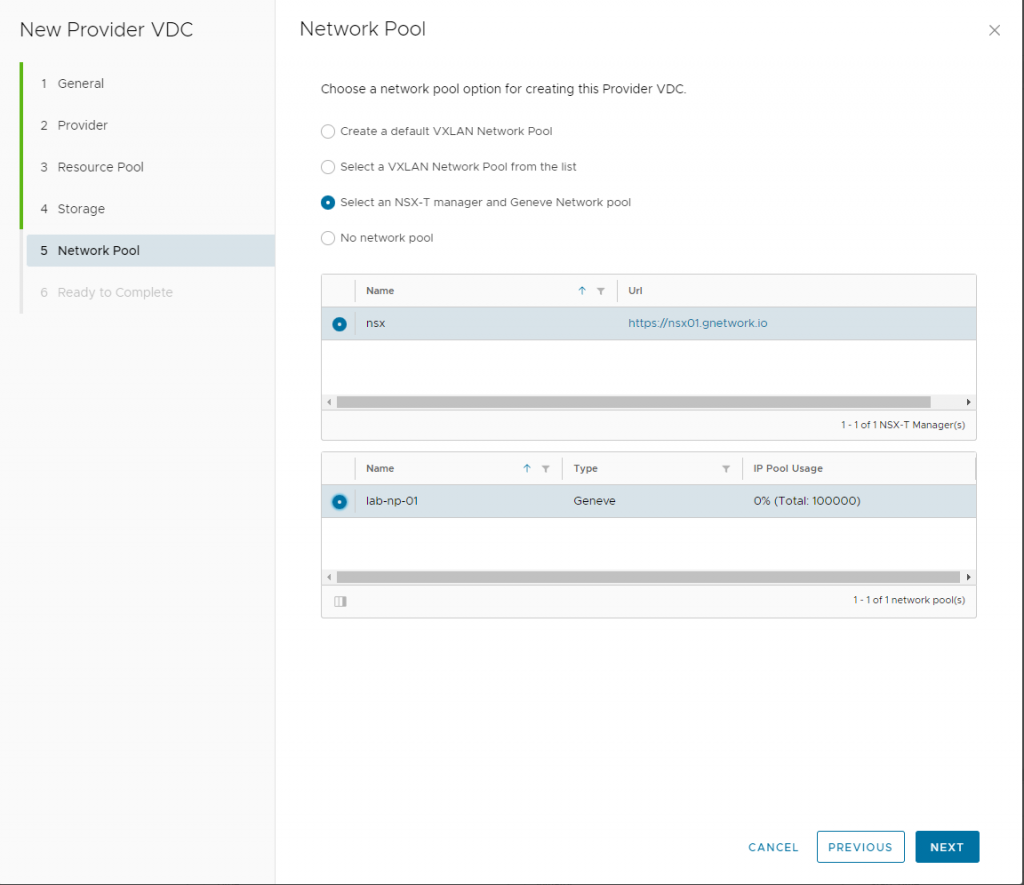

Well, let's begin! First we will create a network pool, which supplies the networks that reside within the tenant environment and runs on top of Geneve on the NSX-T overlay.

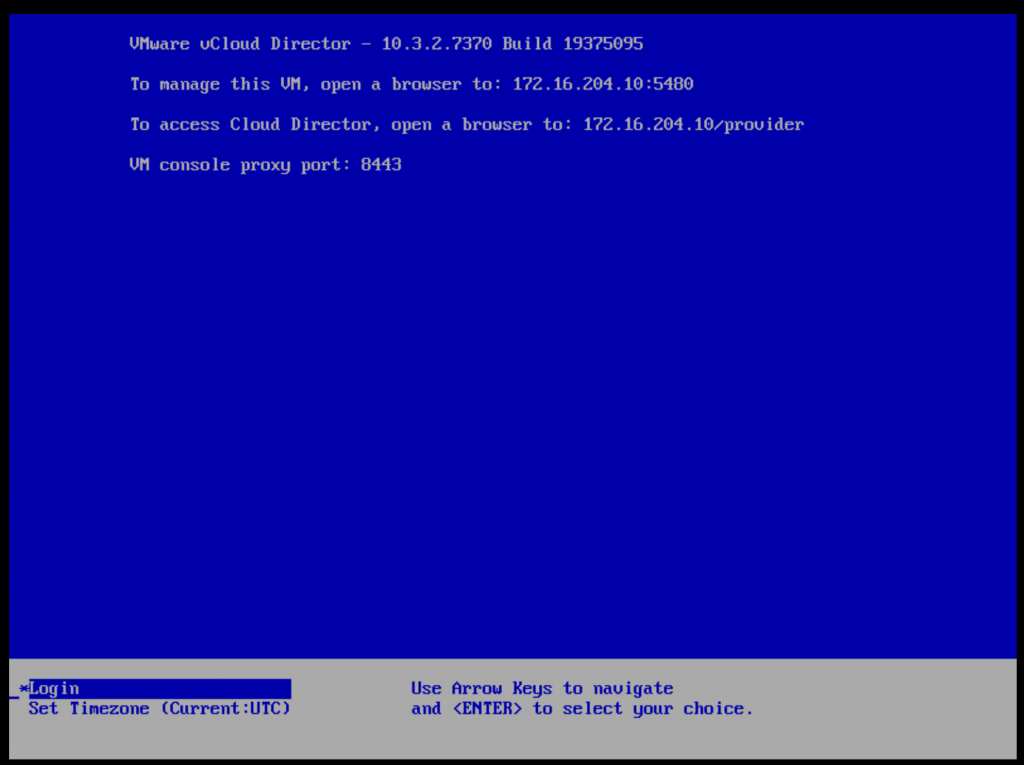

- Log in to the Provider portal of VCD with the administrator account

- https://<vcd-ip>/provider/
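If you would rather script the login than click through the portal, the sketch below only builds the login request and does not send it. To the best of my knowledge the VCD OpenAPI exposes a provider session endpoint at `/cloudapi/1.0.0/sessions/provider` that takes Basic authentication in the form `user@System:password`. The API version `36.0` is an assumption, so check the API reference for your release before using it.

```python
import base64


def build_provider_login_request(host: str, user: str, password: str,
                                 api_version: str = "36.0") -> dict:
    """Describe (but do not send) a provider login request for VCD.

    api_version is an assumed value; use the one your VCD release supports.
    """
    # Provider administrators authenticate against the "System" organization.
    credentials = f"{user}@System:{password}".encode("utf-8")
    token = base64.b64encode(credentials).decode("ascii")
    return {
        "method": "POST",
        "url": f"https://{host}/cloudapi/1.0.0/sessions/provider",
        "headers": {
            "Accept": f"application/json;version={api_version}",
            "Authorization": f"Basic {token}",
        },
    }
```

The returned dictionary can be handed to whichever HTTP client you prefer. I kept the network call out on purpose so the example stays safe to run anywhere.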

Go to Network Pools

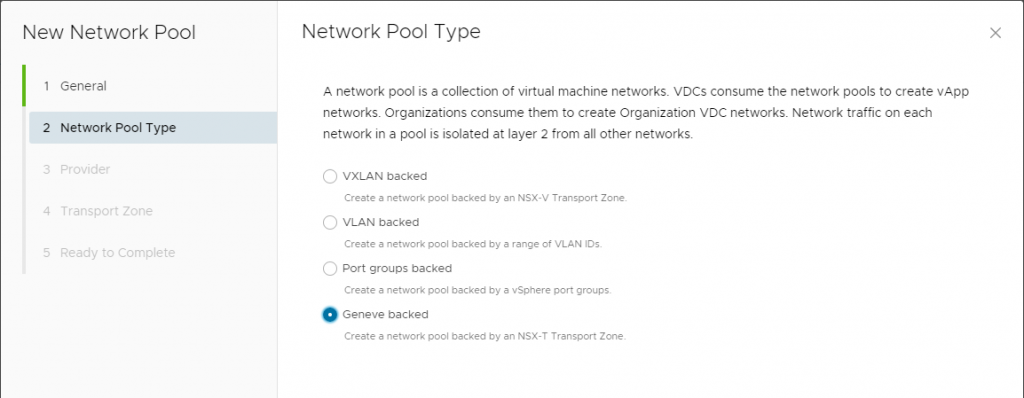

The network pool will be Geneve-backed so that it rides the NSX-T overlay

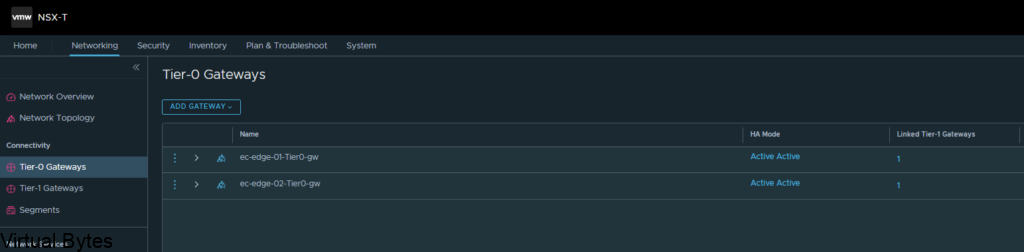

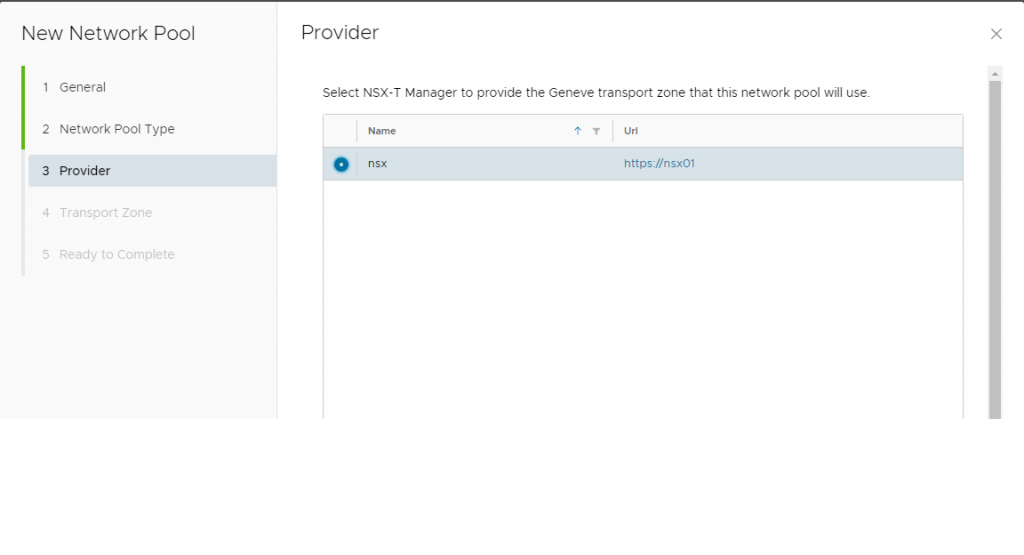

Select the NSX-T Manager

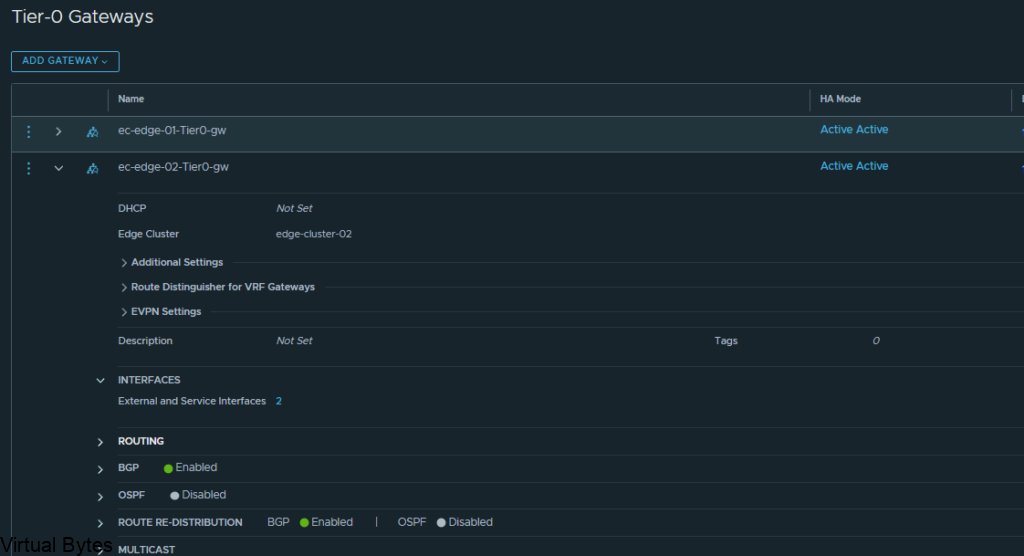

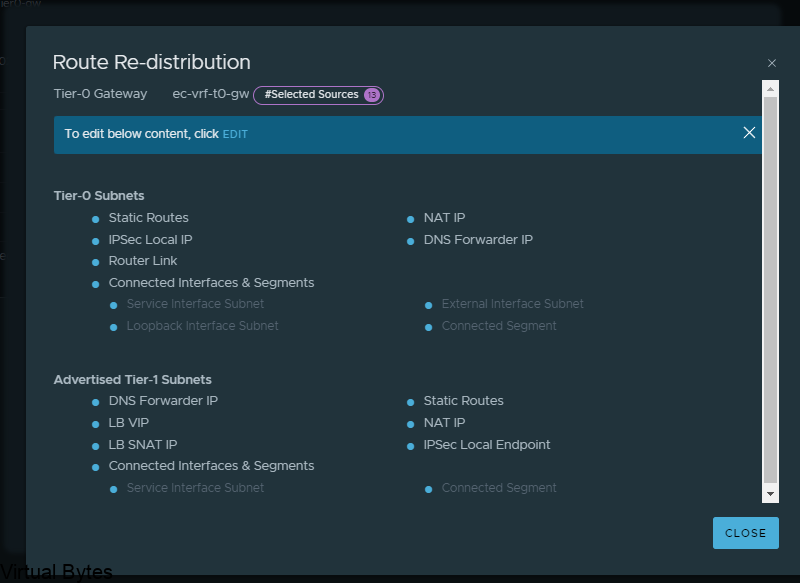

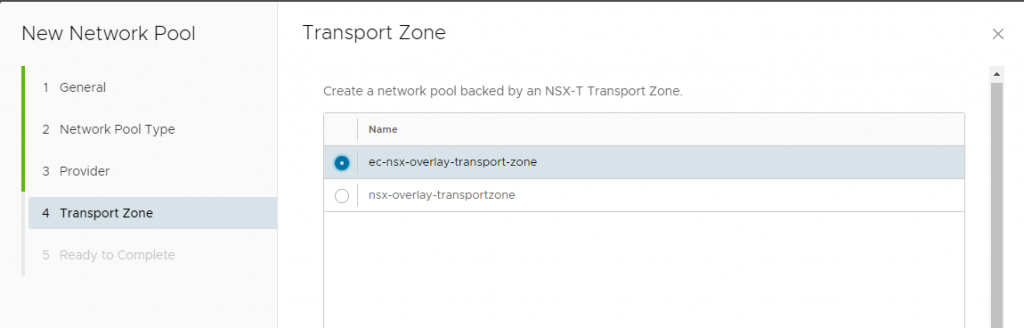

The network pool is backed by an NSX-T transport zone, so select the transport zone that you created for your edge nodes during the NSX-T setup.

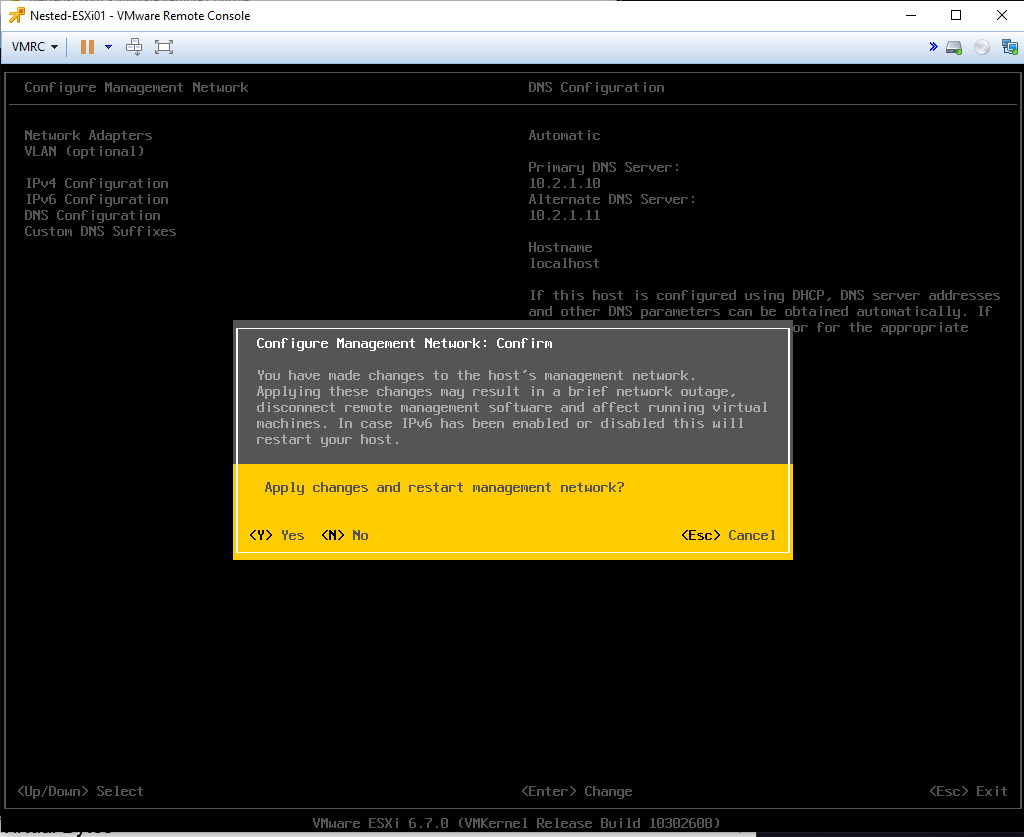

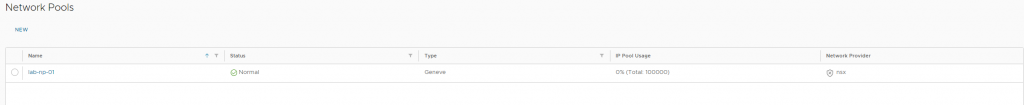

Once you have followed the steps and set up your network pool, you should see something like this! The network pool has been successfully created, as shown below.

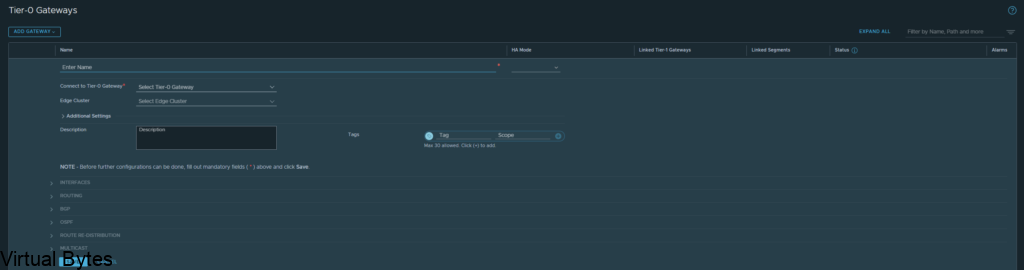

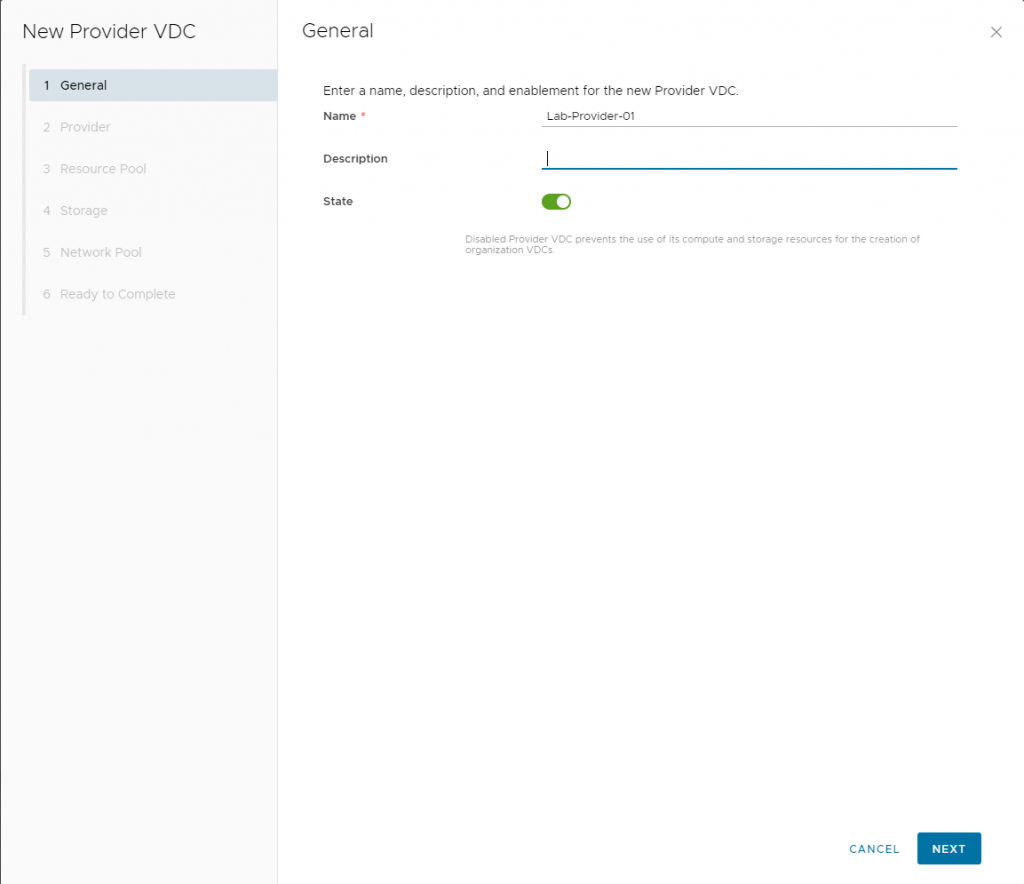

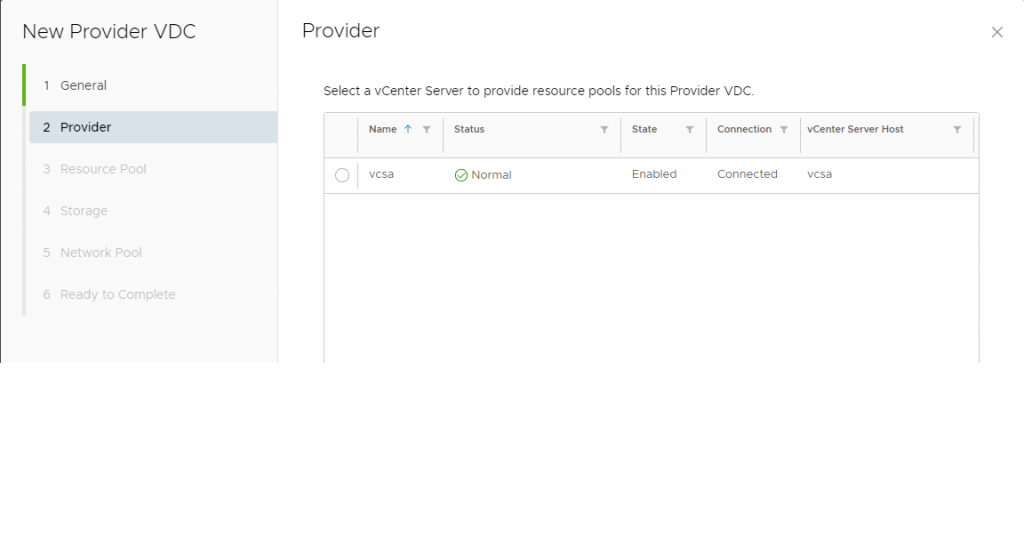

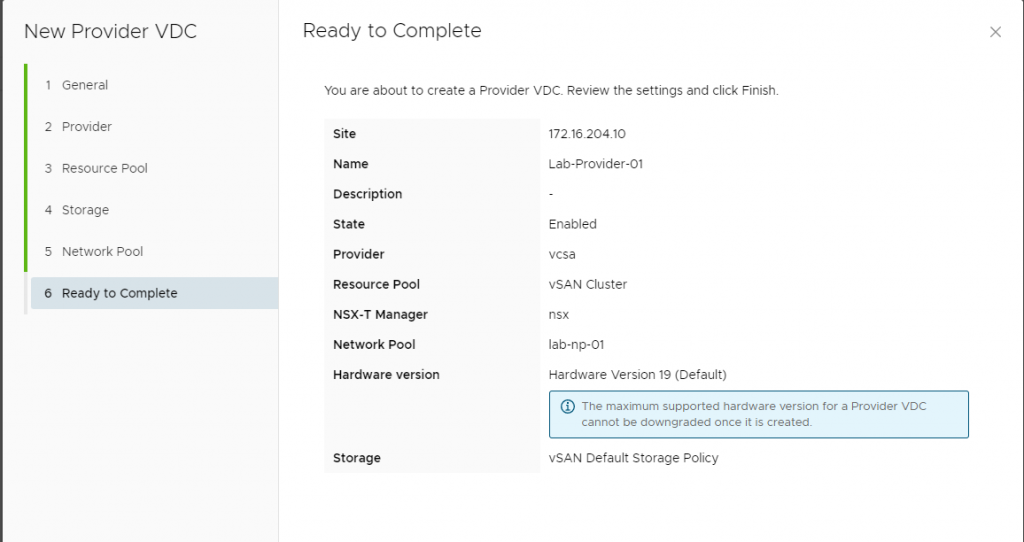

After the network pool has been created, we will create the Provider VDC (Virtual Data Center)

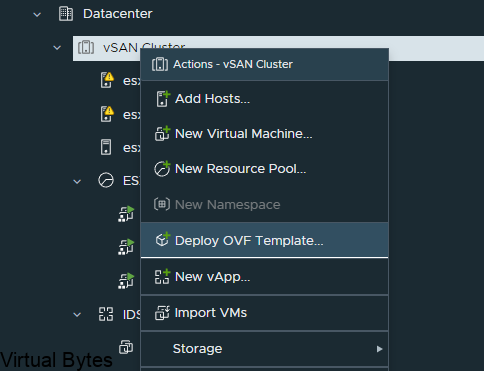

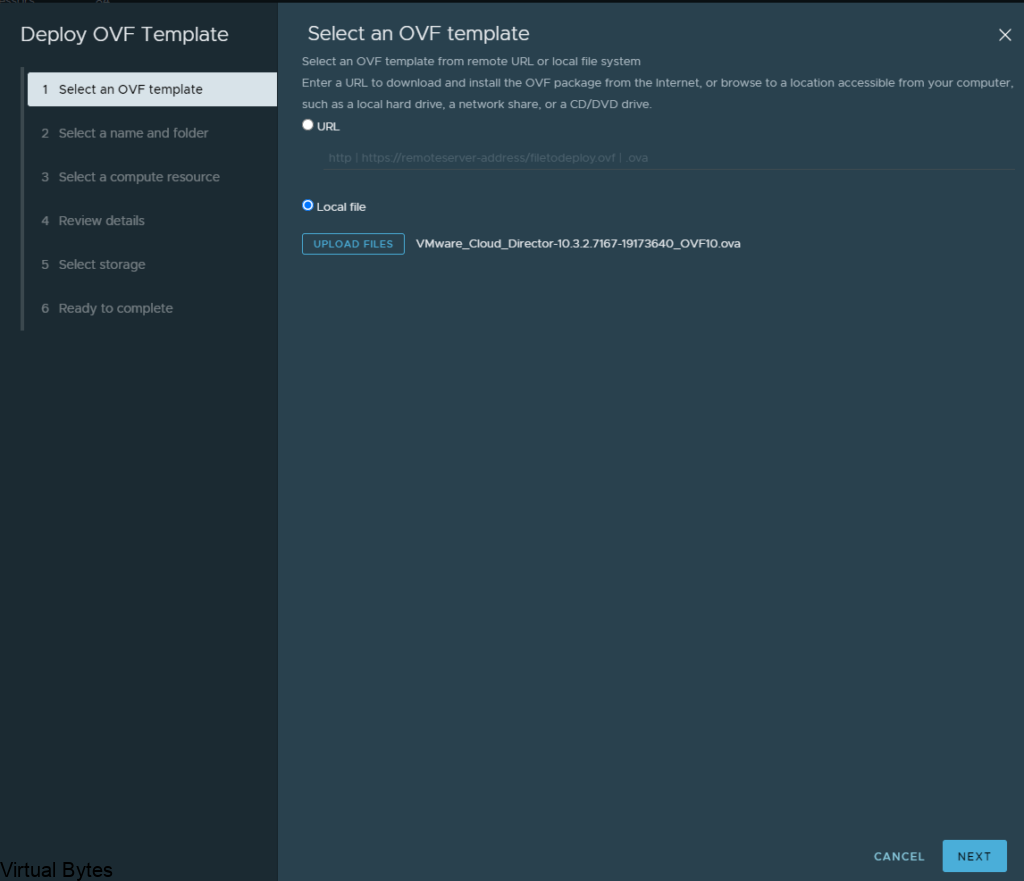

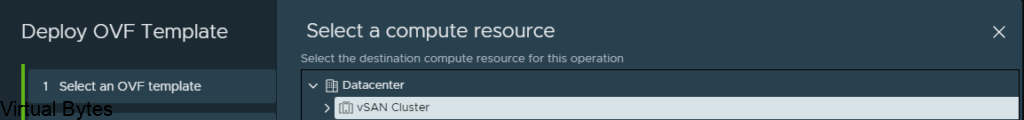

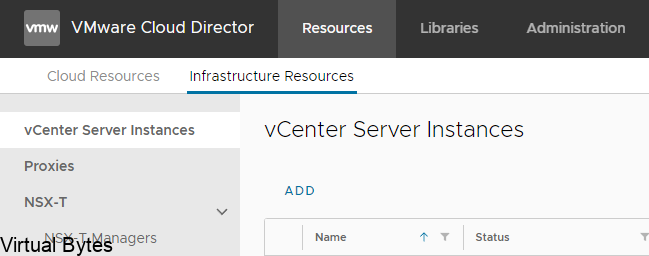

Select the provider vCenter you configured in the Infrastructure section

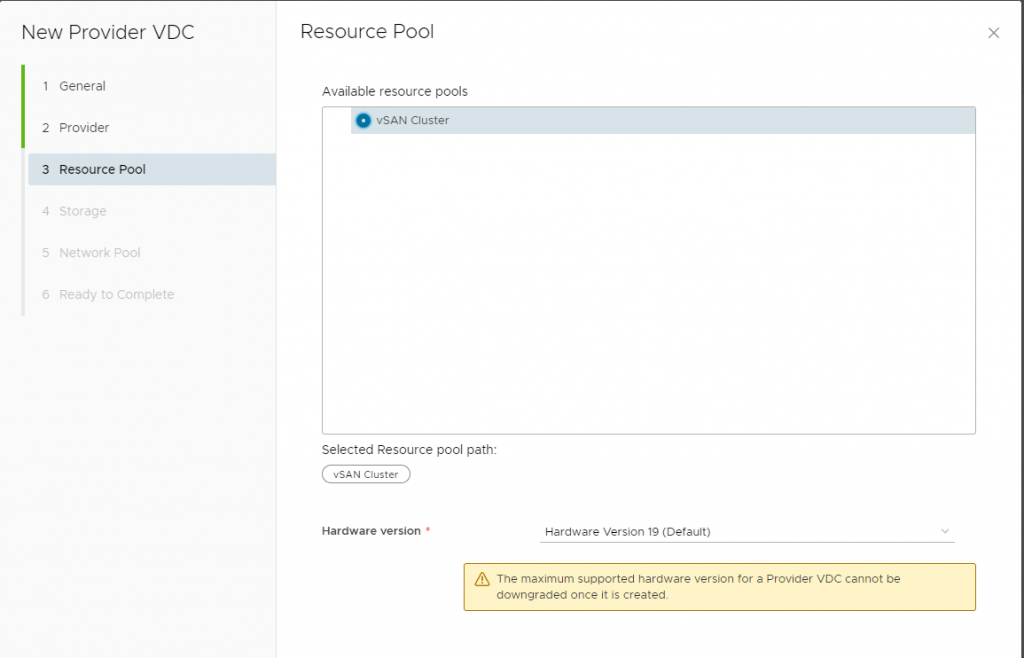

Select the cluster. In my case, I have a vSAN cluster.

Once you have selected the vSAN or other cluster in your environment, you may proceed. Leave the Hardware Version at its default, since this is the maximum hardware version VCD can run and accept.

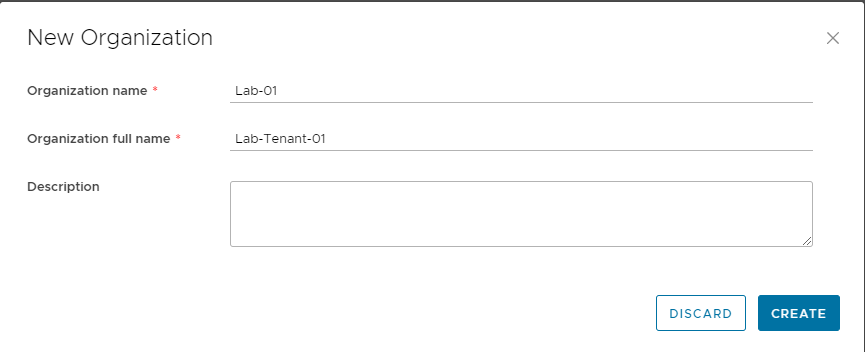

- Next, we will create an organization so that we can attach a VDC to it. For this walkthrough my org is Lab-01. That is the same name you use when you log in to VCD as a tenant.

- An organization is just a logical group of resources that is presented to customers. Each organization has its own isolation/security boundaries and its own web UI, and it can integrate with an identity source such as LDAP for seamless user management.
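Because the organization name is what the tenant logs in with, it also ends up in the tenant portal URL. A small sketch, assuming the usual `/tenant/<org-name>` path next to the `/provider/` path shown earlier (the helper names and the example host are mine):

```python
from urllib.parse import quote


def provider_portal_url(host: str) -> str:
    """URL of the provider portal, as used earlier in this walkthrough."""
    return f"https://{host}/provider/"


def tenant_portal_url(host: str, org_name: str) -> str:
    """URL of the tenant portal for a given organization name."""
    # Percent-encode the org name so unusual characters cannot break the path.
    return f"https://{host}/tenant/{quote(org_name, safe='')}"
```

For my lab that would be `tenant_portal_url("<vcd-ip>", "Lab-01")`, with the placeholder replaced by your own VCD address.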

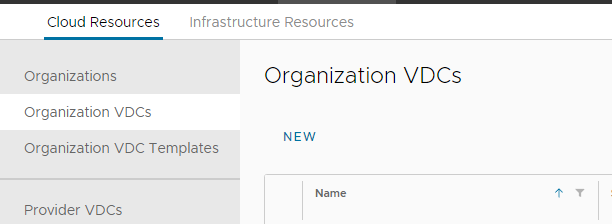

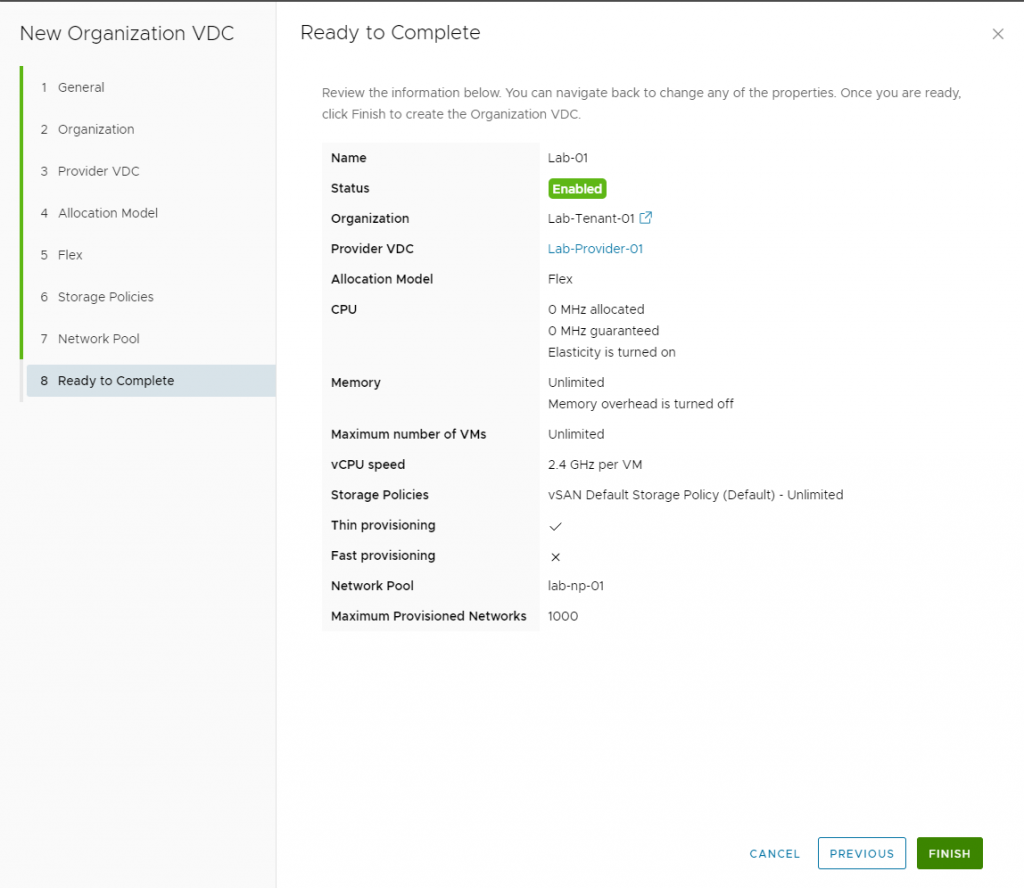

Once the new organization has been created, we will create an Organization VDC (Virtual Data Center)

Click on Organization VDCs and then click “NEW” to create one

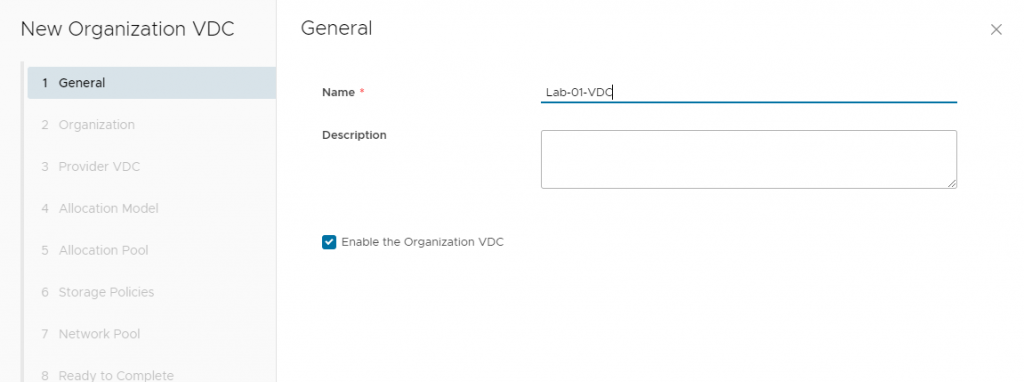

Type a name for the organization you wish to create

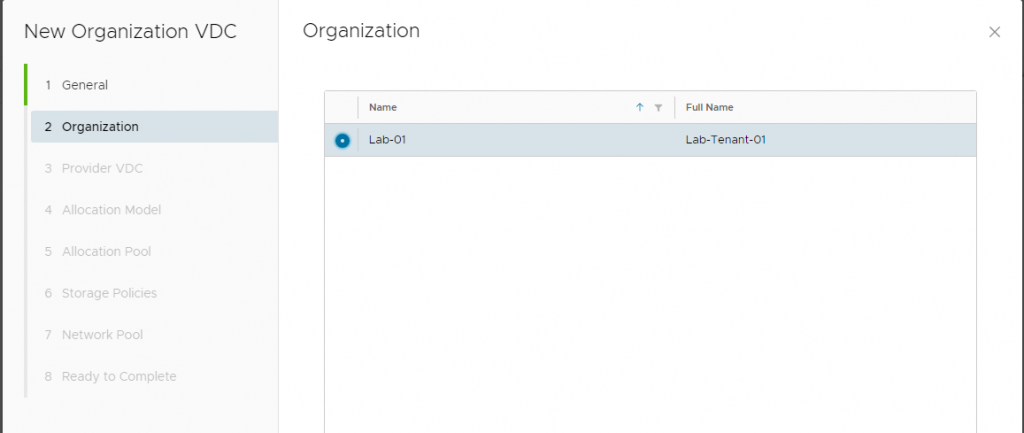

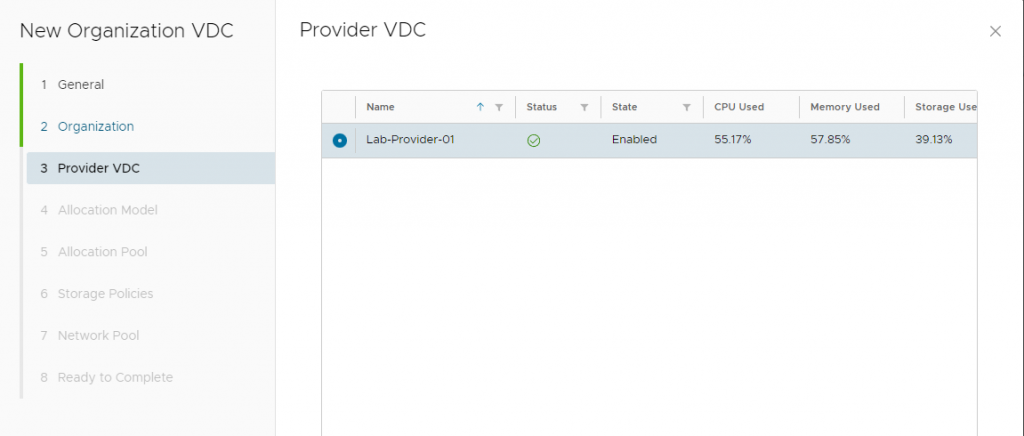

Attach that organization to the provider virtual datacenter we created earlier

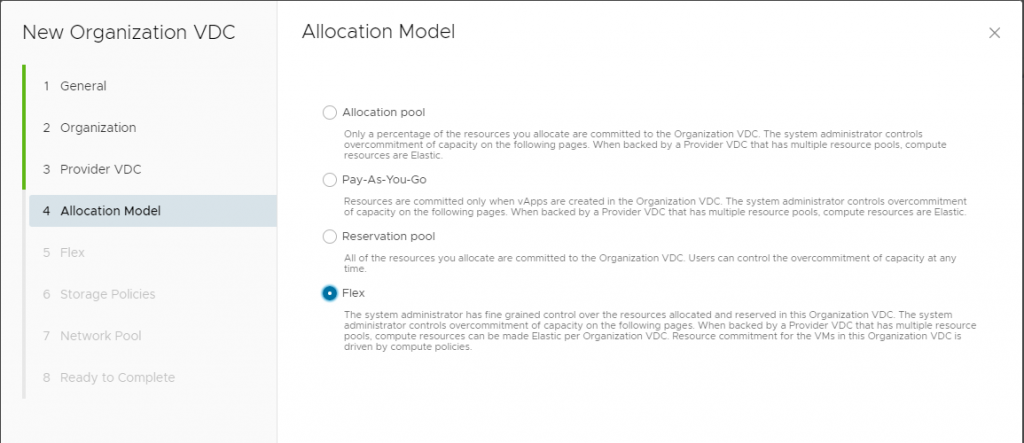

Select the allocation model. In my experience the Flex model is the most flexible, since it gives you better control over the resources, even at the VM level. More information is available on VMware’s website.

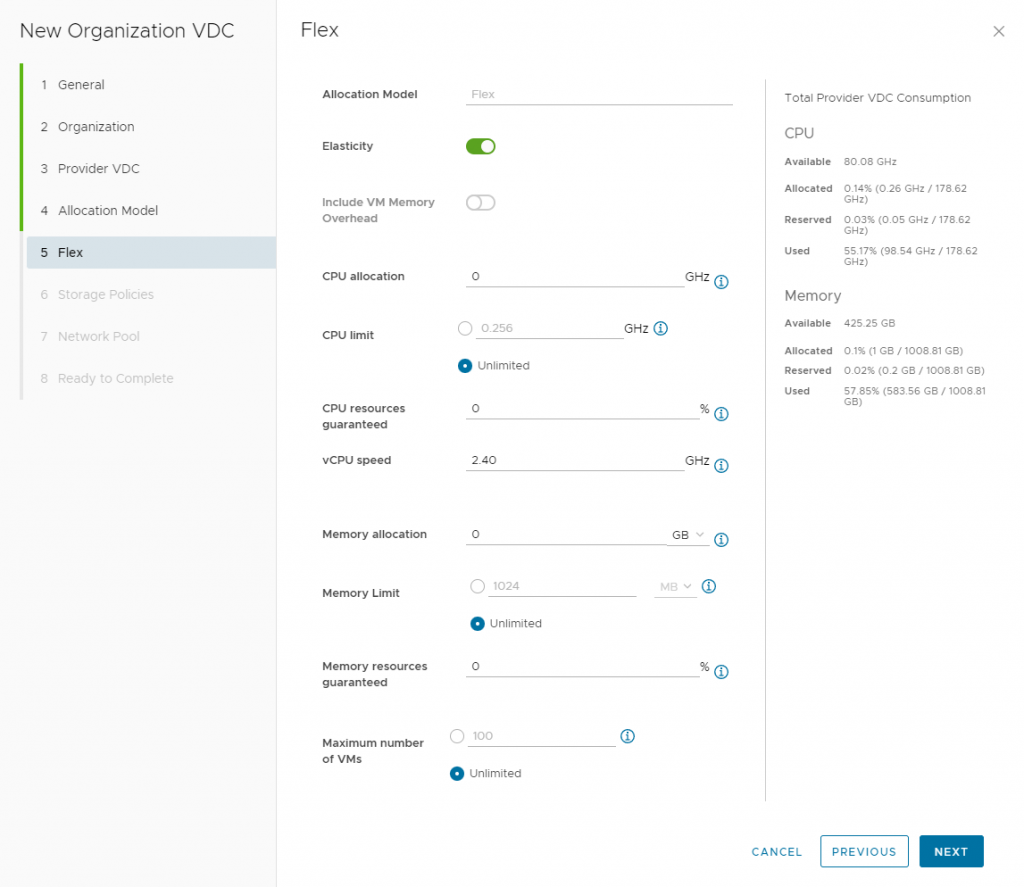

For this demonstration I am not allocating any resources; I am giving my tenant unlimited resources from my vSAN cluster. For a production environment you will want to use the proper allocation model and resource limits.
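For a production tenant you would normally set an allocation and a guarantee percentage instead of leaving everything unlimited. The sketch below shows the simplified arithmetic behind that idea: the guaranteed part of an allocation is what gets reserved on the cluster. The function name and the numbers are hypothetical, and the real behaviour depends on the allocation model you pick.

```python
def reserved_capacity(allocation: float, guarantee_pct: float) -> float:
    """Return the part of an allocation that is guaranteed (reserved).

    allocation can be in any unit (GHz of CPU, GB of memory);
    guarantee_pct is the guaranteed share, from 0 to 100.
    """
    if not 0 <= guarantee_pct <= 100:
        raise ValueError("guarantee_pct must be between 0 and 100")
    return allocation * guarantee_pct / 100


# Hypothetical example: 100 GHz allocated with a 20% guarantee.
cpu_reserved = reserved_capacity(100.0, 20)
```

With a 0% guarantee nothing is reserved, which is close to how a low-cost test tier would behave.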

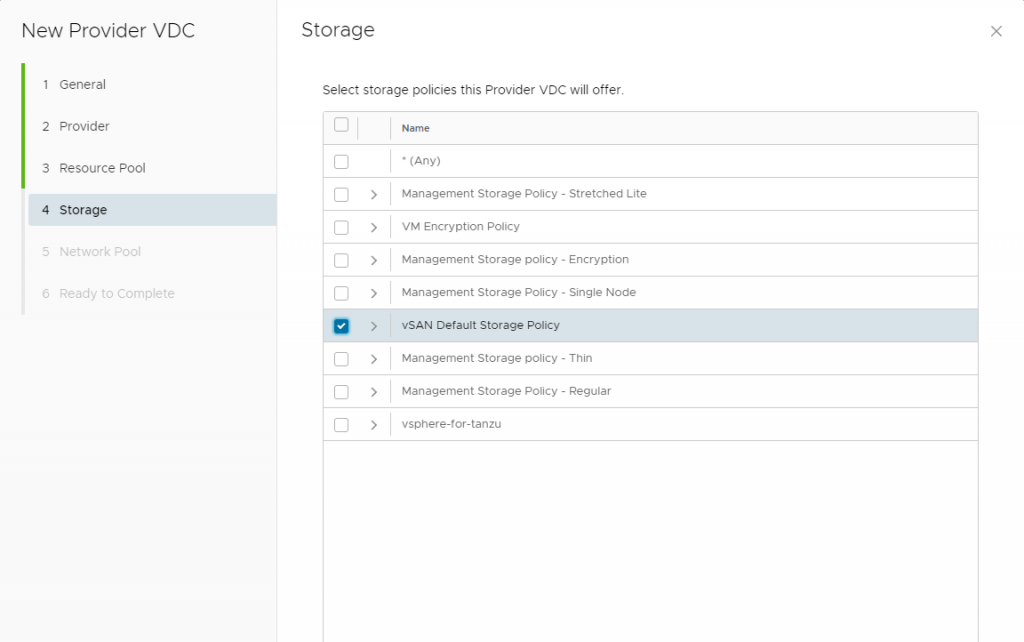

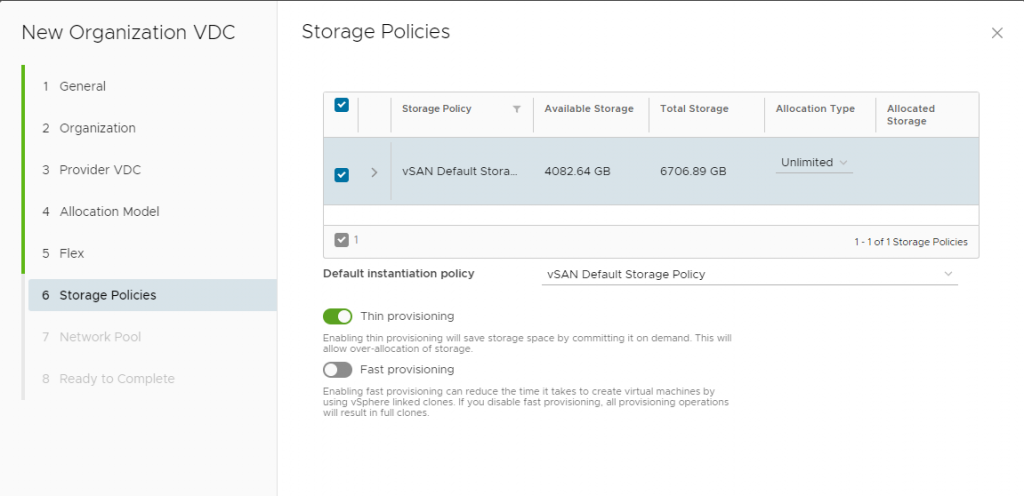

Select the storage policy. I also like to enable thin provisioning to save storage space!

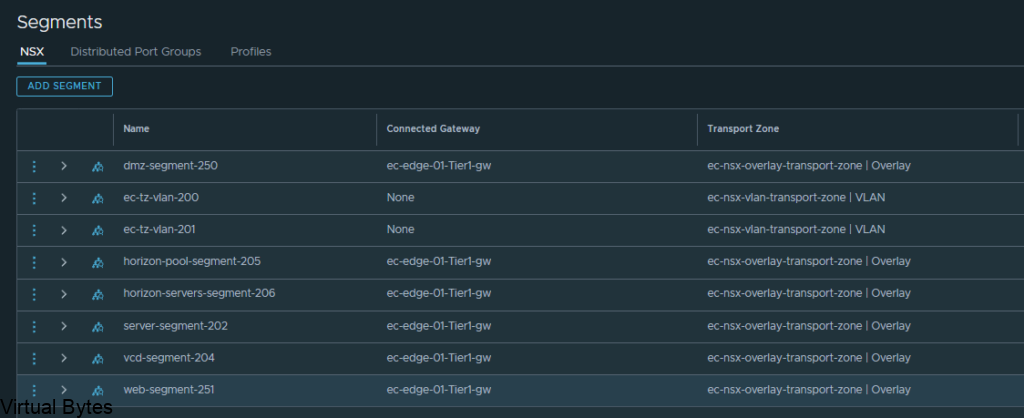

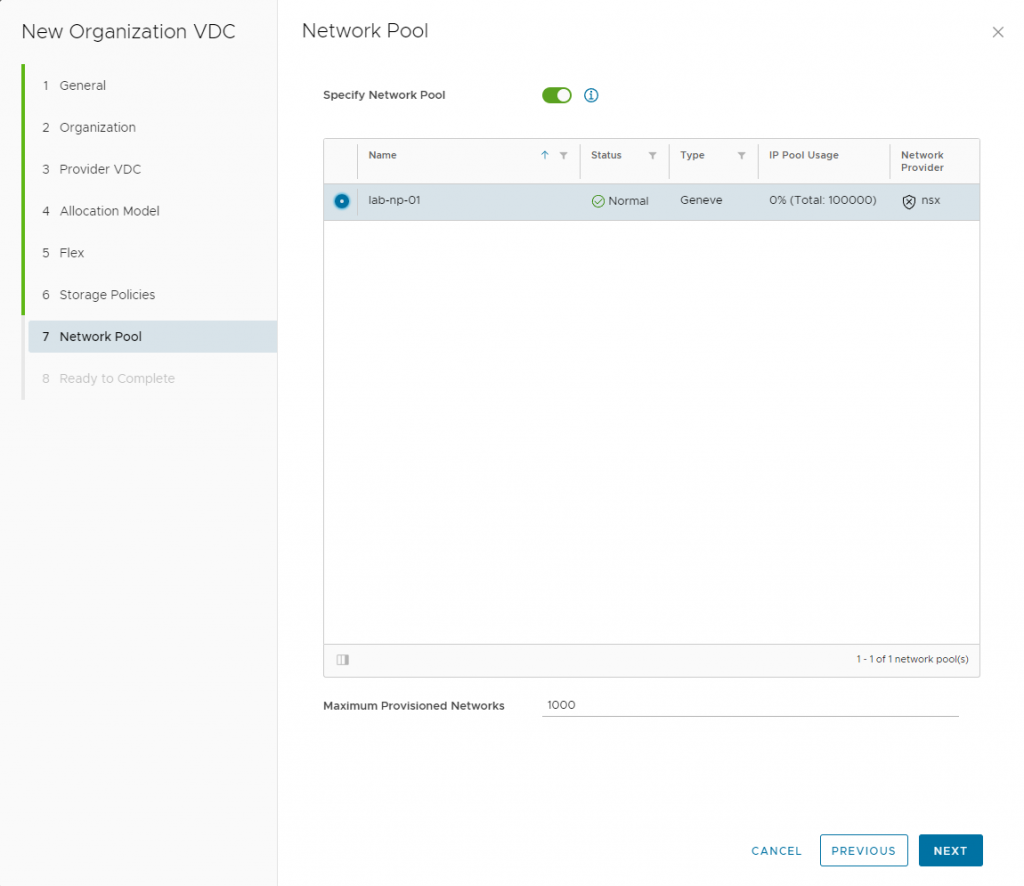

Each organization will have its own network pool, but it will run on top of the Geneve overlay

We are about to finish the setup of the VDC!

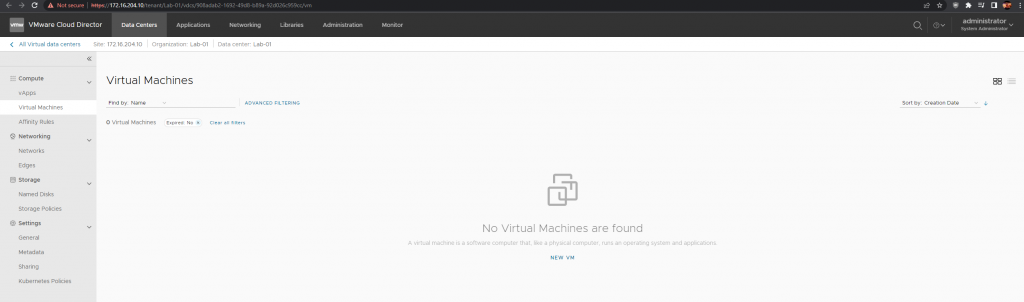

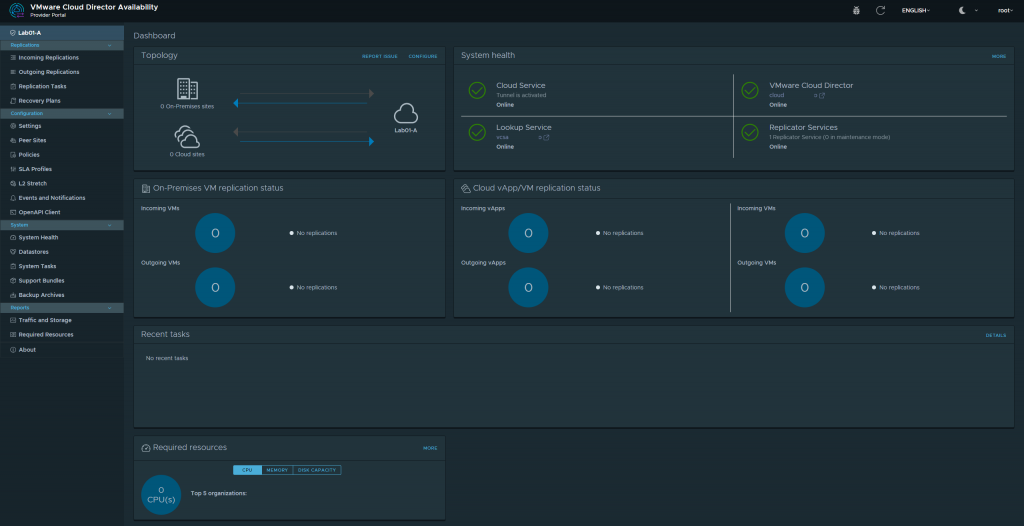

We have logged into the new Tenant space we have created! 🙂