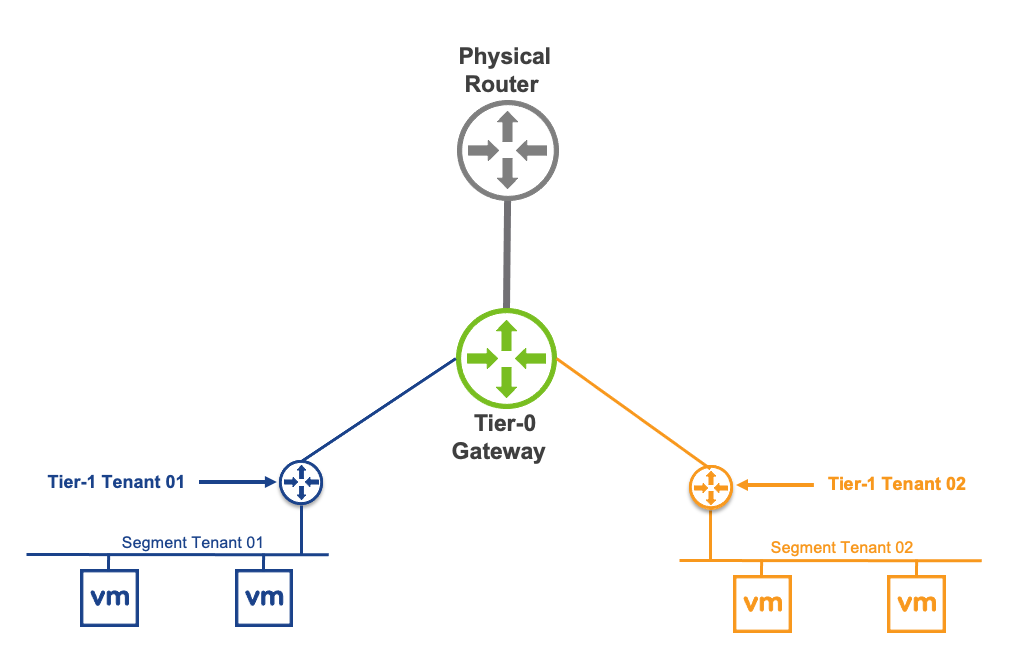

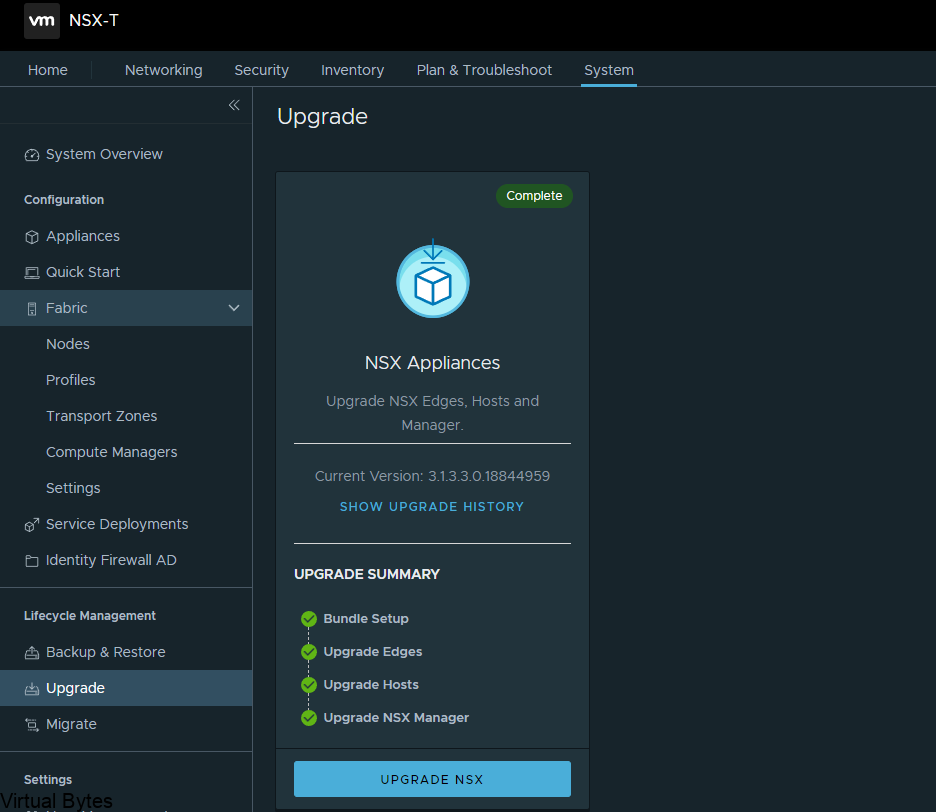

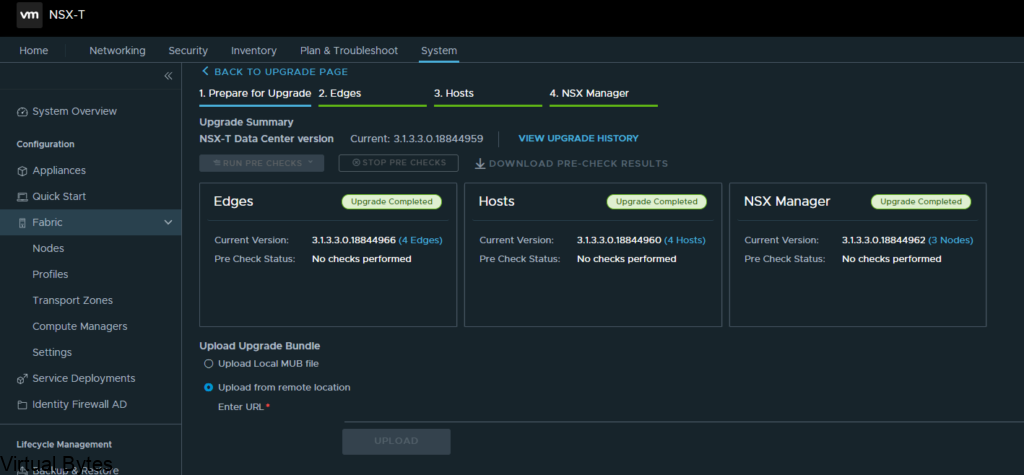

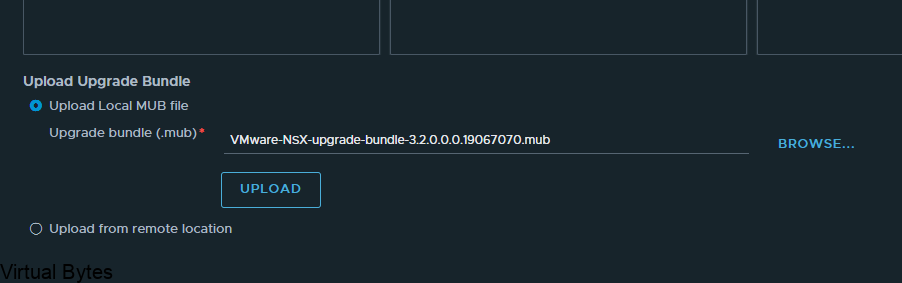

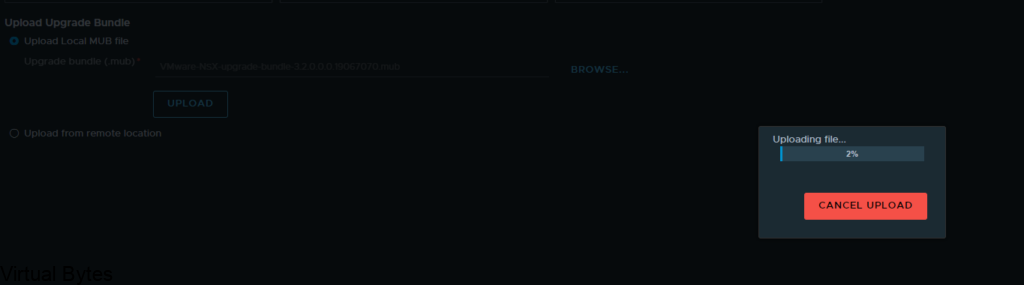

So where to begin? My goal is to become a VCIX in both DCV and NV, and it will come soon! I have been passionate about learning and progressing my skill set within VMware solutions and about creating complex environments. Along the way, a few folks at VMware invited me into the VMware NSX Ninja Program for NSX-T and VCF Architecture. The program is geared toward the intermediate to expert level, so it does challenge you, but I have managed to succeed so far! I have finished Week 1 of 3. The VMware Certified Instructors are amazing: they teach and walk through real-world solutions, which gives you a good understanding of the many bells and whistles that NSX-T and VCF can offer! As I go through the NSX Ninja journey, I will be adding more great content to this post! Stay tuned 🙂

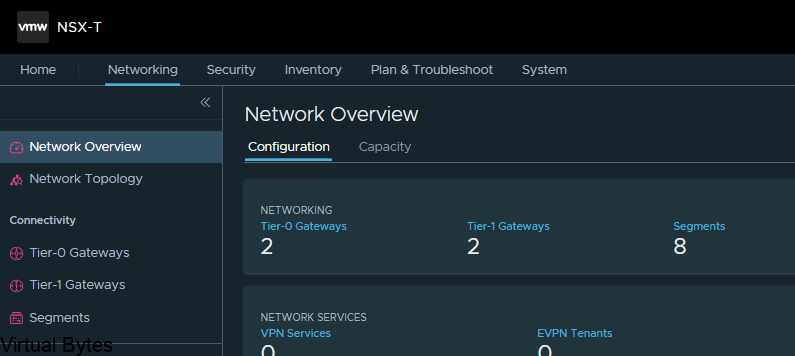

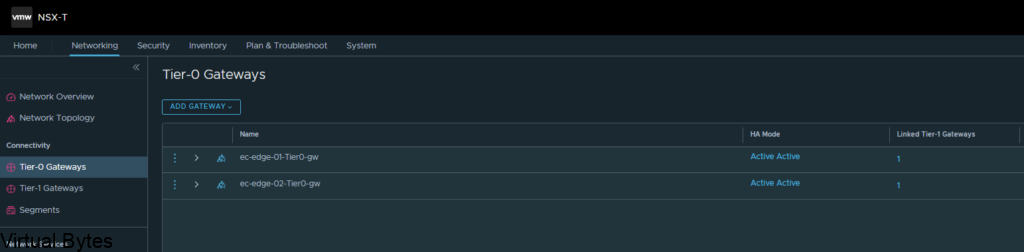

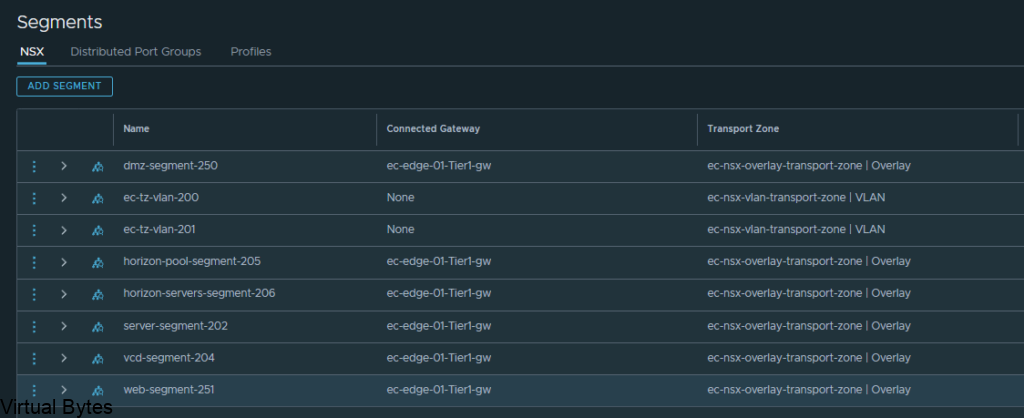

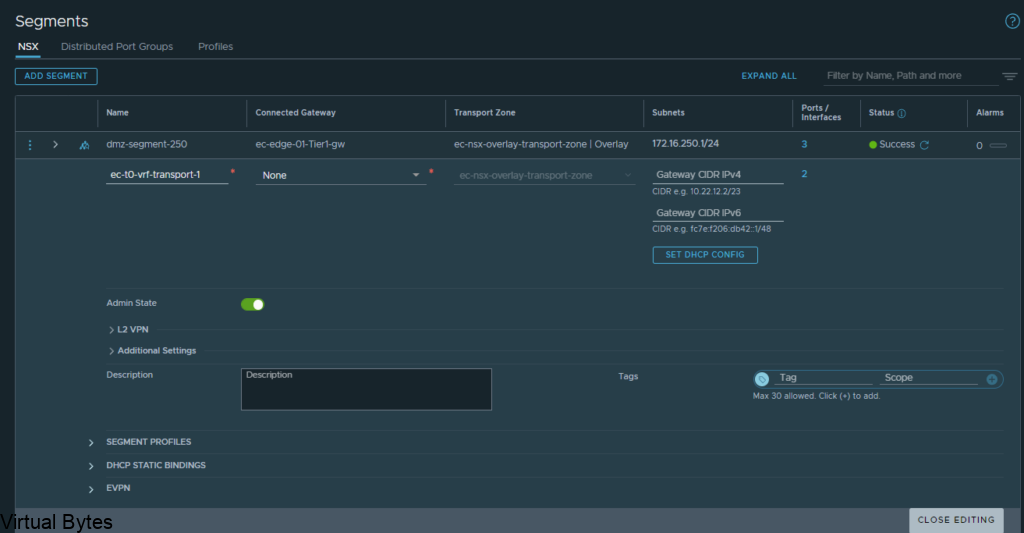

NSX Ninja Week 1 – Overview