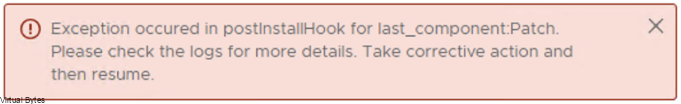

Have you recently tried to upgrade your vCenter from version 8.0.2.00100 to 8.0.2.00300, only to hit an unexpected roadblock in the form of VMware vCenter High Availability (vCHA)? Well, you’re not alone! In this blog post, we’ll look at a common pitfall when upgrading vCenter while the vCHA service is enabled: what causes the failure, how to troubleshoot it, and how to get your upgrade back on track.

What is vCHA?

vCenter High Availability (vCenter HA) protects vCenter Server against host and hardware failures. The active-passive architecture of the solution can also help you reduce downtime significantly when you patch vCenter Server.

With this vCenter release, end users have run into several other upgrade issues, such as the time zone setting or localhost DNS resolution. In my case, though, it was vCHA!

- Time zone not set to Etc/UTC in VAMI or the CLI

- Localhost and DNS resolution entries in the /etc/hosts file

- vCenter Server High Availability Service enabled but not configured – This was my issue!

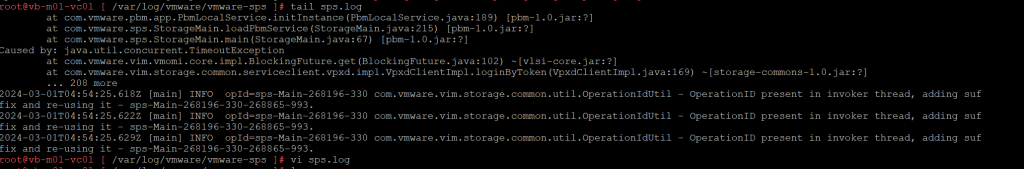

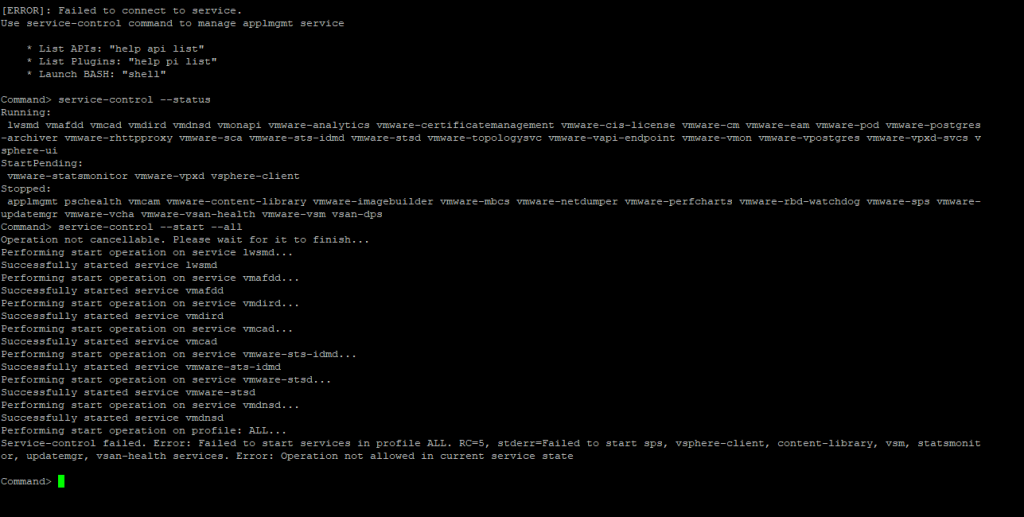

The patch failed with the following error:

Performing start operation on profile: ALL

Service-control failed. Error: Failed to start services in profile ALL. RC=2, stderr=Failed to start vcha services. Error: Service crashed while starting.

The Cause – The vCHA service was set to Automatic startup but was never configured, so it crashed when the patch tried to start all services.

The Fix –

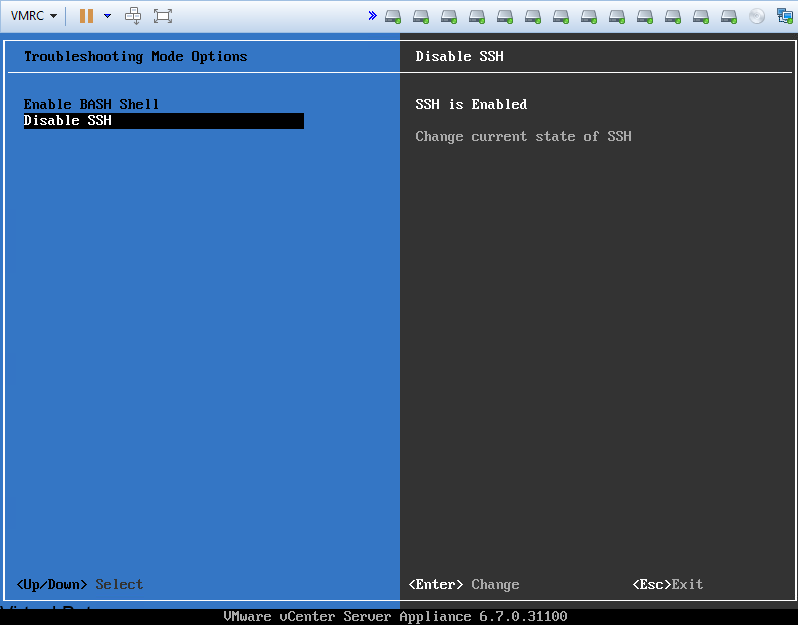

You can apply the fix from either the CLI or the GUI.

- Take a fresh snapshot of the vCenter Server appliance before making any changes

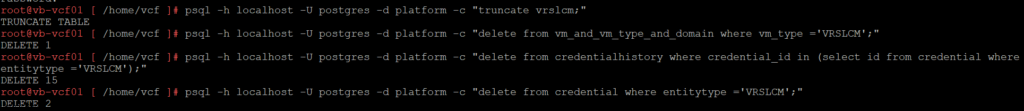

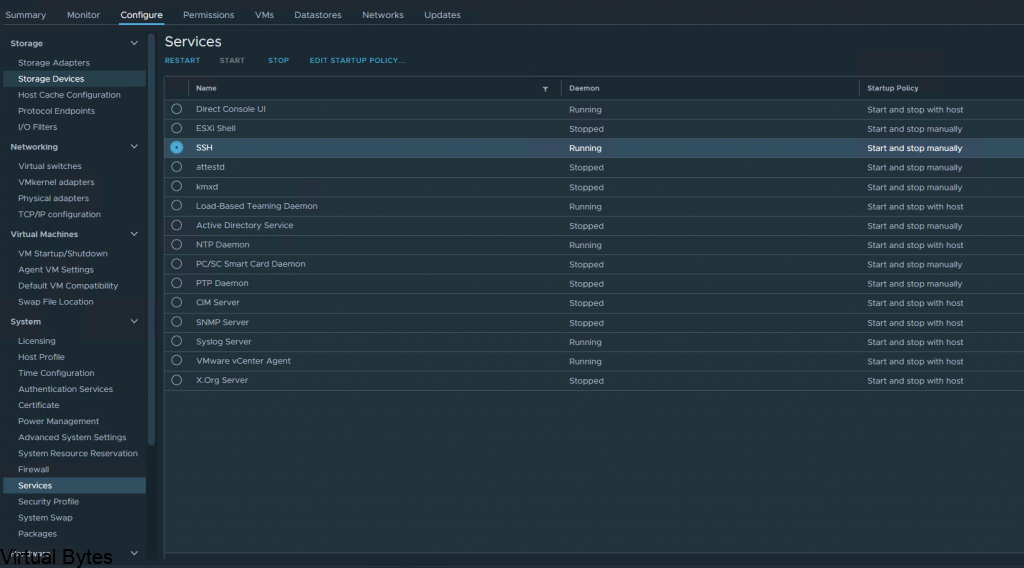

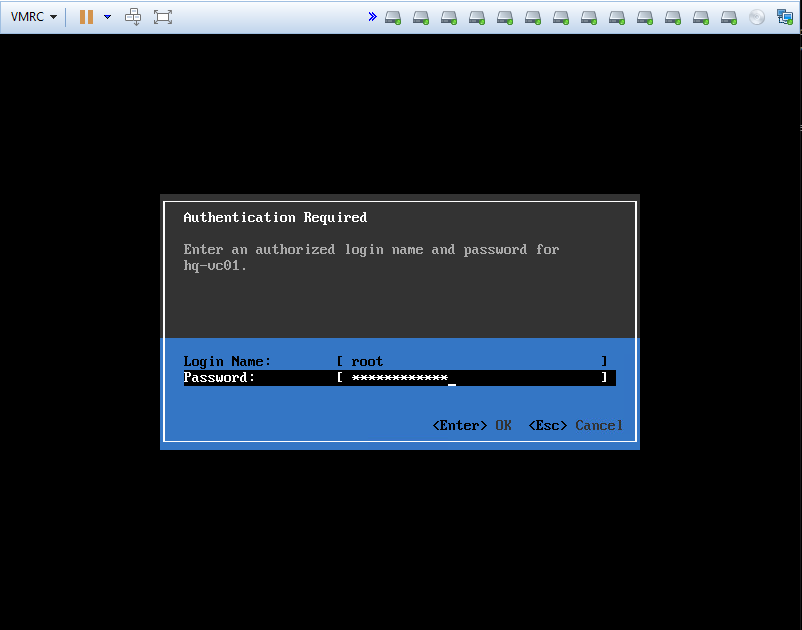

- SSH into vCenter. If you are running VCF, you will need to look up the password with the VCF lookup_passwords utility:

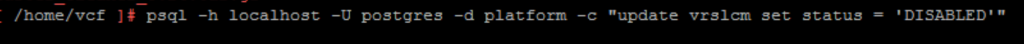

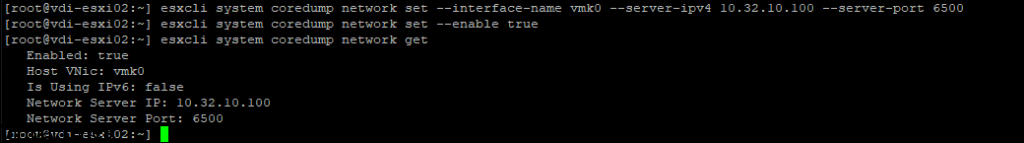

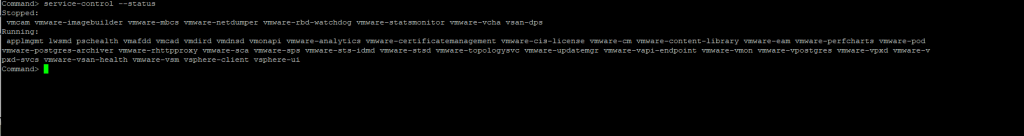

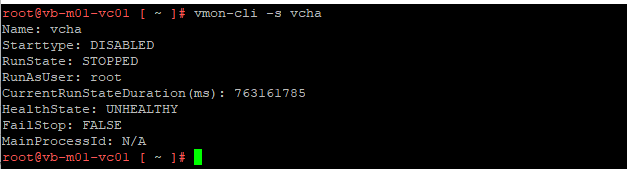

- Run the first command below to check the status of the vCHA service, then the second to set its startup type to Disabled:

Command Line –

vmon-cli -s vcha

vmon-cli -S DISABLED -U vcha

Once the service is disabled, either resume the patch, or revert to the snapshot and repeat the steps above to make sure the vCHA service is disabled before patching again.

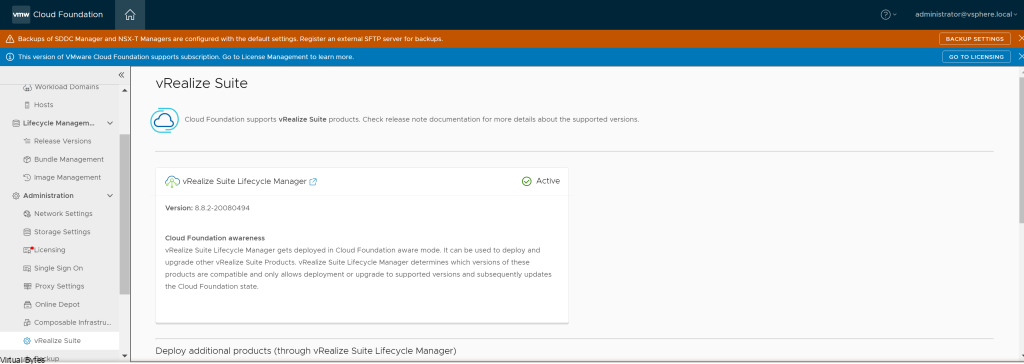
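If you would rather script the CLI check, the two commands above could be wrapped in something like the following. This is only a minimal sketch: it assumes that the output of vmon-cli -s vcha contains a line of the form "Starttype: <VALUE>", and the helper names get_starttype and needs_disable are made up for illustration. Check the output on your own appliance before relying on it.

```shell
#!/bin/bash
# Sketch: disable the vcha service only if it is still AUTOMATIC or MANUAL.
# Assumption: `vmon-cli -s vcha` prints a line like "Starttype: AUTOMATIC".
# get_starttype and needs_disable are hypothetical helper names.

# Read status text on stdin and print the value of the Starttype line.
get_starttype() {
    awk -F': *' '/^[[:space:]]*Starttype:/ {
        sub(/[[:space:]]+$/, "", $2)   # trim trailing whitespace
        print $2
        exit
    }'
}

# Return success when the given start type still needs to be changed.
needs_disable() {
    case "$1" in
        AUTOMATIC|MANUAL) return 0 ;;
        *) return 1 ;;
    esac
}

# Only run against the appliance when vmon-cli is actually present.
if command -v vmon-cli >/dev/null 2>&1; then
    current=$(vmon-cli -s vcha | get_starttype)
    echo "vcha start type: ${current:-unknown}"
    if needs_disable "$current"; then
        vmon-cli -S DISABLED -U vcha   # same command as in the steps above
        vmon-cli -s vcha               # show the status again to confirm
    fi
fi
```

If the start type cannot be parsed, the script changes nothing and prints "unknown", so you can fall back to running the two commands by hand.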

GUI –

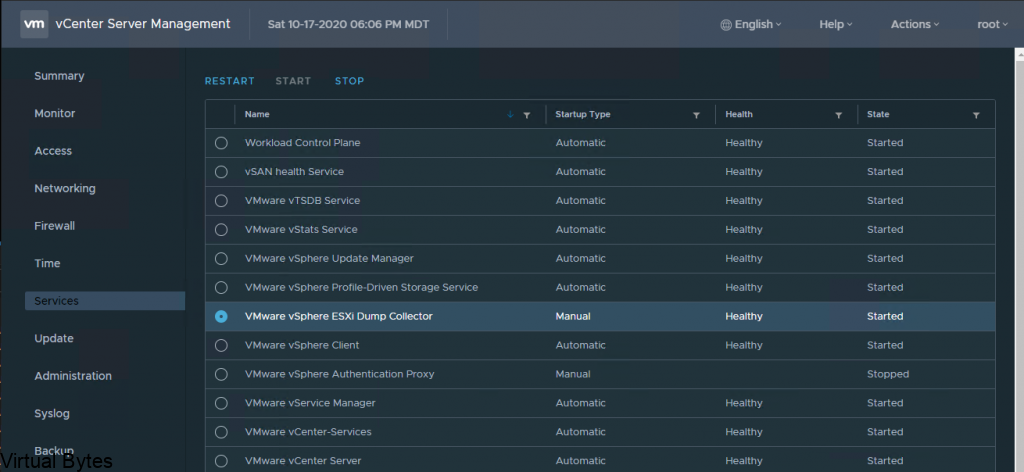

- Log in to VAMI and go to Services

- Select VMware vCenter High Availability and set Startup Type to Manual (to verify, still run the commands above to disable the service and confirm that it is disabled)

Once you have rebooted or reverted to the snapshot and completed all of the previous steps, you should have a successful upgrade of your vCSA!